Industrial Evolution – Helping Engineers to Slash Break-Down Maintenance Time with Gen AI

In-Short

CaveatWisdom

Caveat: If the root cause of a machine breakdown is not identified in time, and proper procedures to fix the problem safely are not followed in a challenging industrial environment, the result can be heavy production loss and safety risks for maintenance personnel.

Wisdom: Troubleshooting and fixing machines requires expert-level understanding and extensive experience in interpreting machine problems. By leveraging cutting-edge Generative AI and IoT technologies that help identify the root cause and fix problems, breakdown maintenance time can be slashed from days to a few hours.

In-Detail

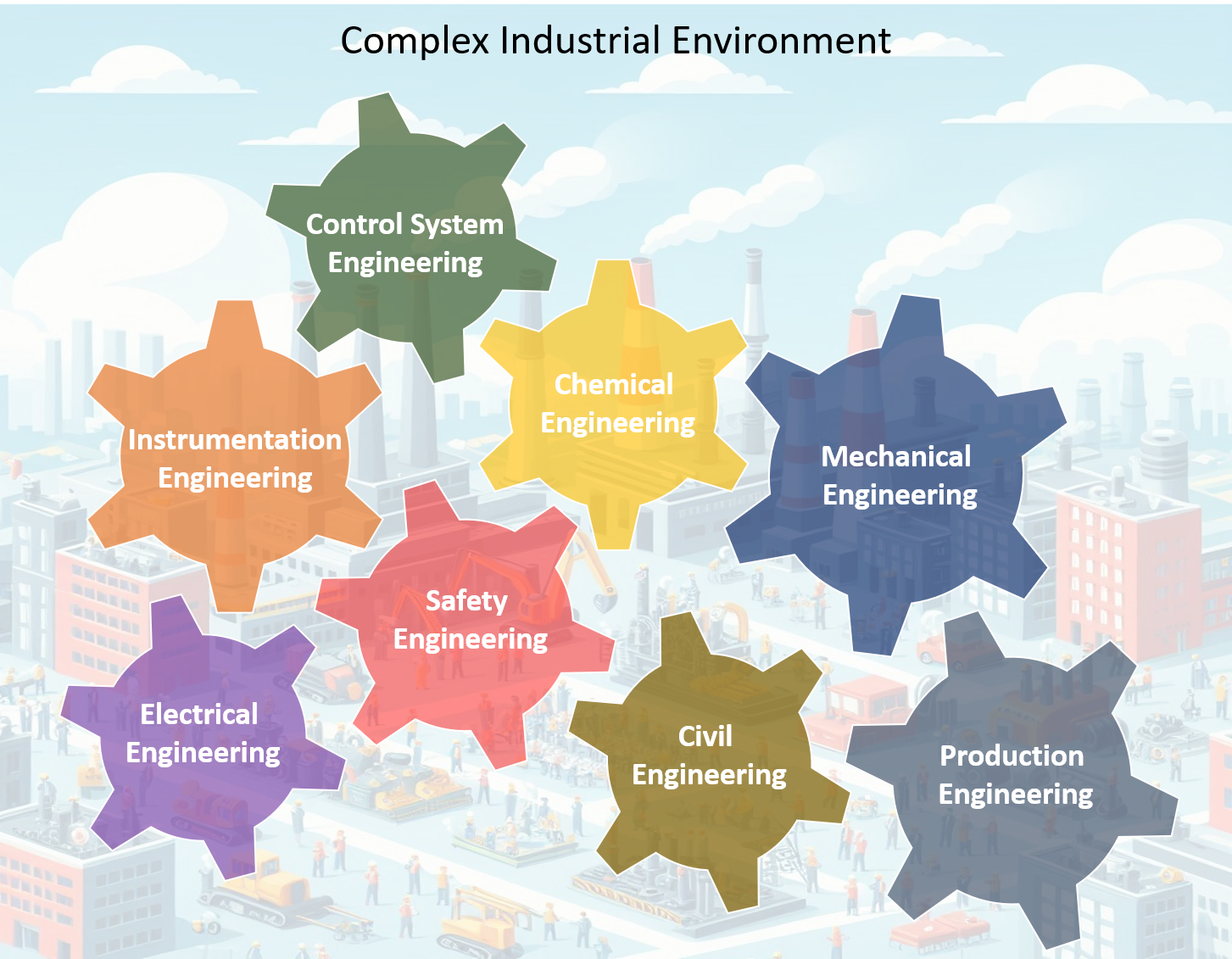

Let’s understand the complex industrial environment before we discuss the solution. Building and operating any major industry, such as Cement, Power, Aluminium Smelter Plants, or Water Treatment Plants, involves many engineering disciplines: Process, Civil, Mechanical, Electrical, Instrumentation, Safety, Chemical and Control Systems.

For example, a Water Treatment plant that supplies water to an entire city requires process and environmental engineering to treat the water; chemical engineering for chlorination and sludge handling; civil engineering to design and construct huge Clariflocculators, Aerators, Filter Beds, Sludge tanks and Pipelines; mechanical engineering for large equipment like Mixers, Rotors, Pumping Motors, Valves and Gates; electrical engineering to power the equipment; instrumentation engineering to measure water parameters; and control systems to control the equipment. All these systems are interconnected, depend on each other’s functions, and are controlled by SCADA systems.

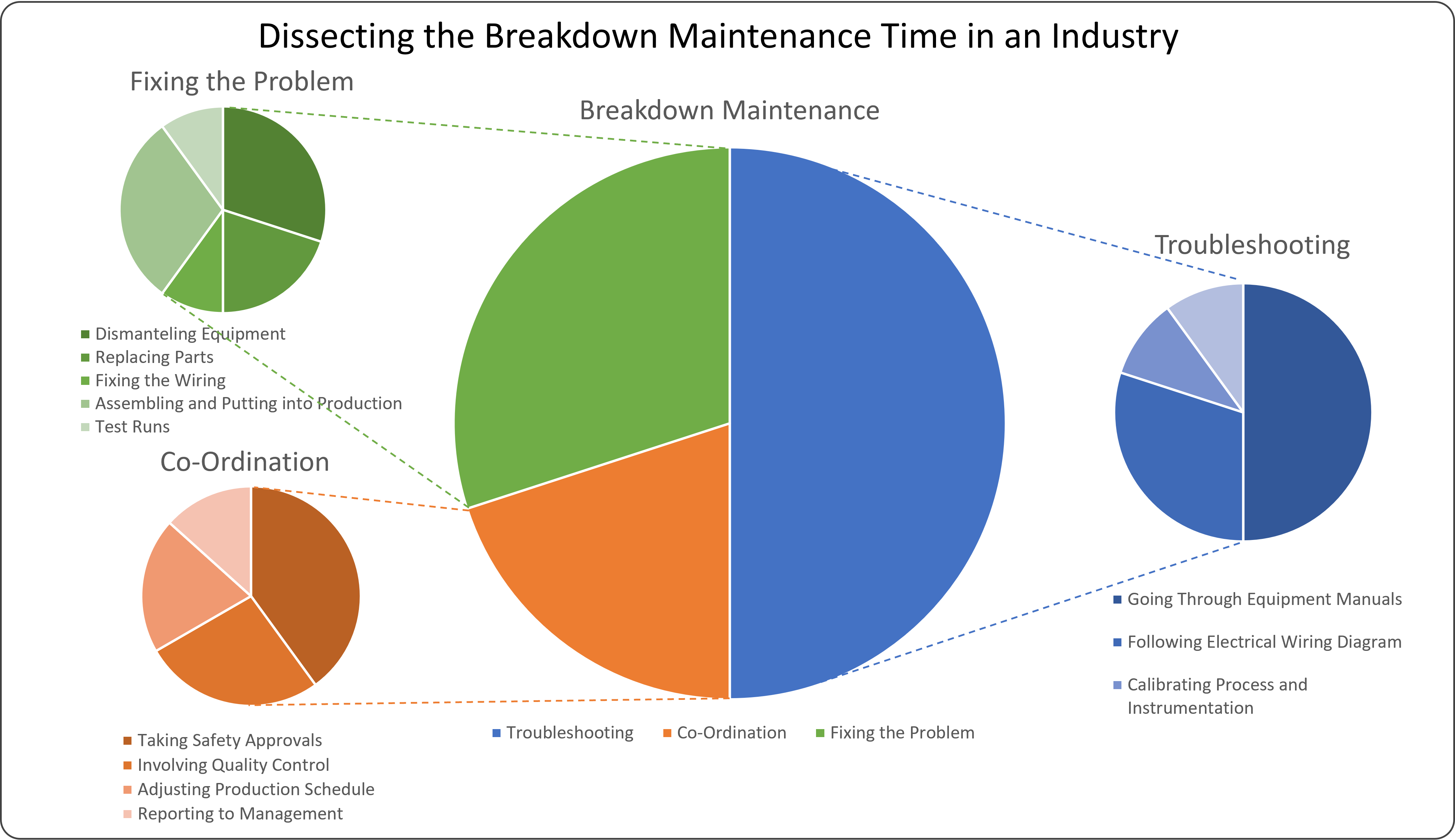

Dissecting Breakdown Maintenance Time

In a complex industrial environment where multiple systems are interconnected, a problem in any one system can affect the whole process. Let’s first look at some important activities that operation and maintenance engineers perform during breakdown maintenance when a problem arises.

- First, engineers must follow the safety protocols and shut down the affected and interrelated systems that could endanger personnel and equipment.

- Coordinate with multiple stakeholders, such as production managers, quality control, logistics, leadership and the safety team, to plan the maintenance activities.

- For troubleshooting, go through many equipment manuals and electrical and control wiring diagrams, and observe and interpret parameters from the SCADA systems.

- Following proper safety procedures, dismantle the equipment, replace the faulty parts, fix the wiring, reassemble the equipment and do test runs before resuming production.

When we dissect the total breakdown maintenance time and look at where it is spent, we find that most of it goes into reading through huge equipment manuals and wiring diagrams, coordination efforts and following procedures.

Solution to Slash Breakdown Maintenance Time from Days to a Few Hours

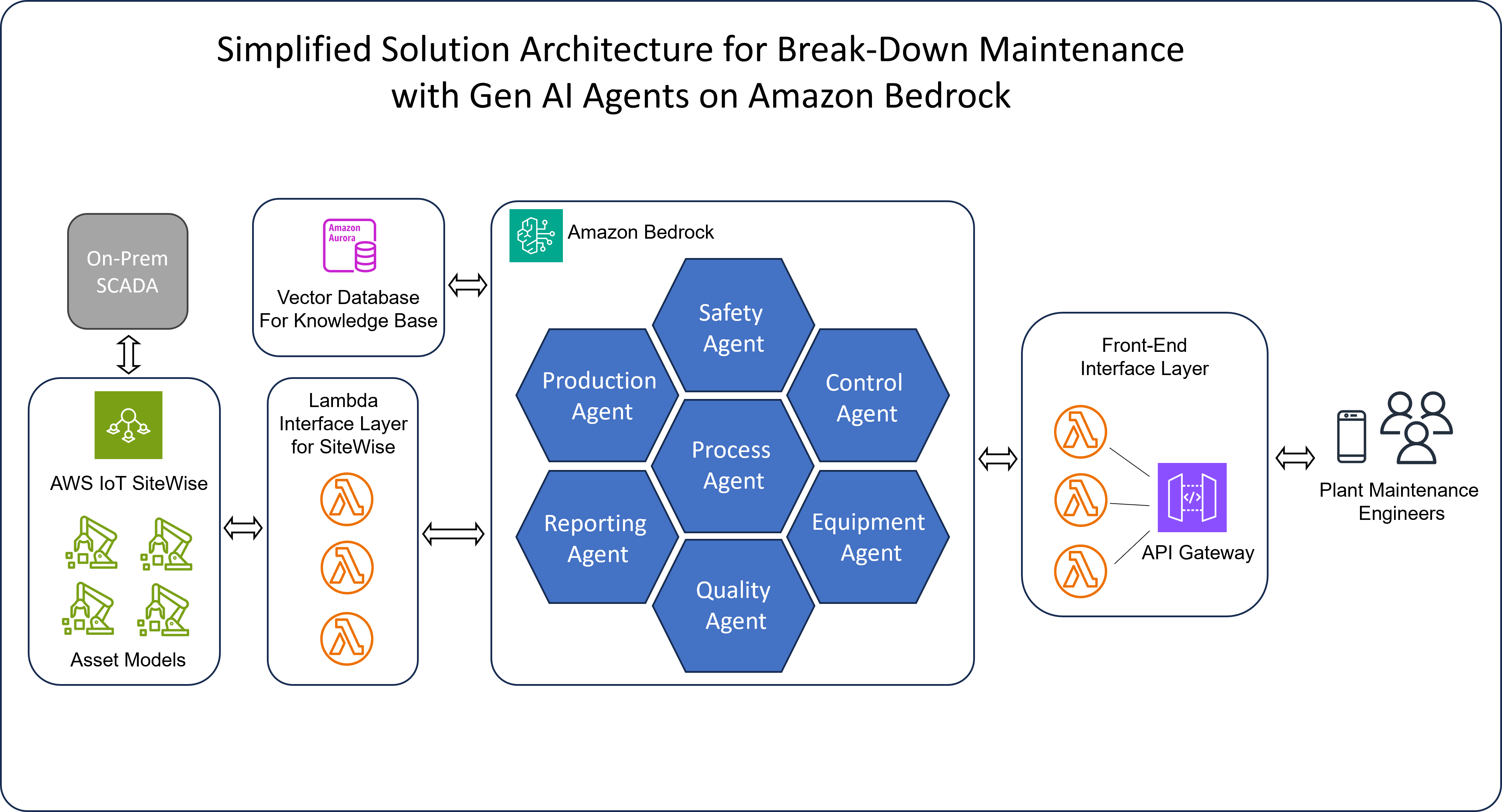

The solution involves deploying multiple Artificial Intelligence (AI) agents that coordinate with each other and assist engineers in finding the root cause of the problem. These AI agents can analyse data from various sources, such as equipment manuals, electrical and control wiring diagrams, and SCADA systems, to quickly identify the issue. By doing so, they eliminate the need for engineers to manually sift through vast amounts of information, thereby saving valuable time.
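To make the manual-search step concrete, here is a minimal Python sketch of how an agent's knowledge base could rank manual passages against a fault description using cosine similarity over embedding vectors. The passages, the three-dimensional vectors and the function names are all made up for illustration; a real deployment would use an embedding model and a vector store rather than hand-written vectors.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)


def top_passages(query_vec, passages, k=2):
    """Return the text of the k passages most similar to the query vector."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in passages]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


# Hypothetical manual passages with toy 3-dimensional "embeddings".
MANUAL_PASSAGES = [
    ("Pump motor: overload relay trip and reset procedure", [0.9, 0.1, 0.0]),
    ("Clariflocculator: gearbox lubrication schedule", [0.1, 0.9, 0.1]),
    ("Pump motor: bearing temperature alarm limits", [0.8, 0.2, 0.3]),
]

if __name__ == "__main__":
    # Toy embedding of a fault description such as "pump motor tripped".
    fault_query = [1.0, 0.0, 0.1]
    for passage in top_passages(fault_query, MANUAL_PASSAGES):
        print(passage)
```

With this toy data, the two pump motor passages rank above the gearbox passage, which is the kind of shortlist an agent could hand to the engineer instead of a full manual.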

Moreover, these AI agents can facilitate better coordination among multiple stakeholders, including production managers, quality control, logistics, leadership, and safety teams. This ensures that maintenance activities are planned and executed efficiently, minimizing downtime and production loss.

By following proper safety procedures, engineers can dismantle the equipment, replace faulty parts, fix wiring, reassemble the equipment, and conduct test runs before resuming production. The AI agents can also provide real-time guidance and support throughout this process, ensuring that all steps are performed correctly and safely.

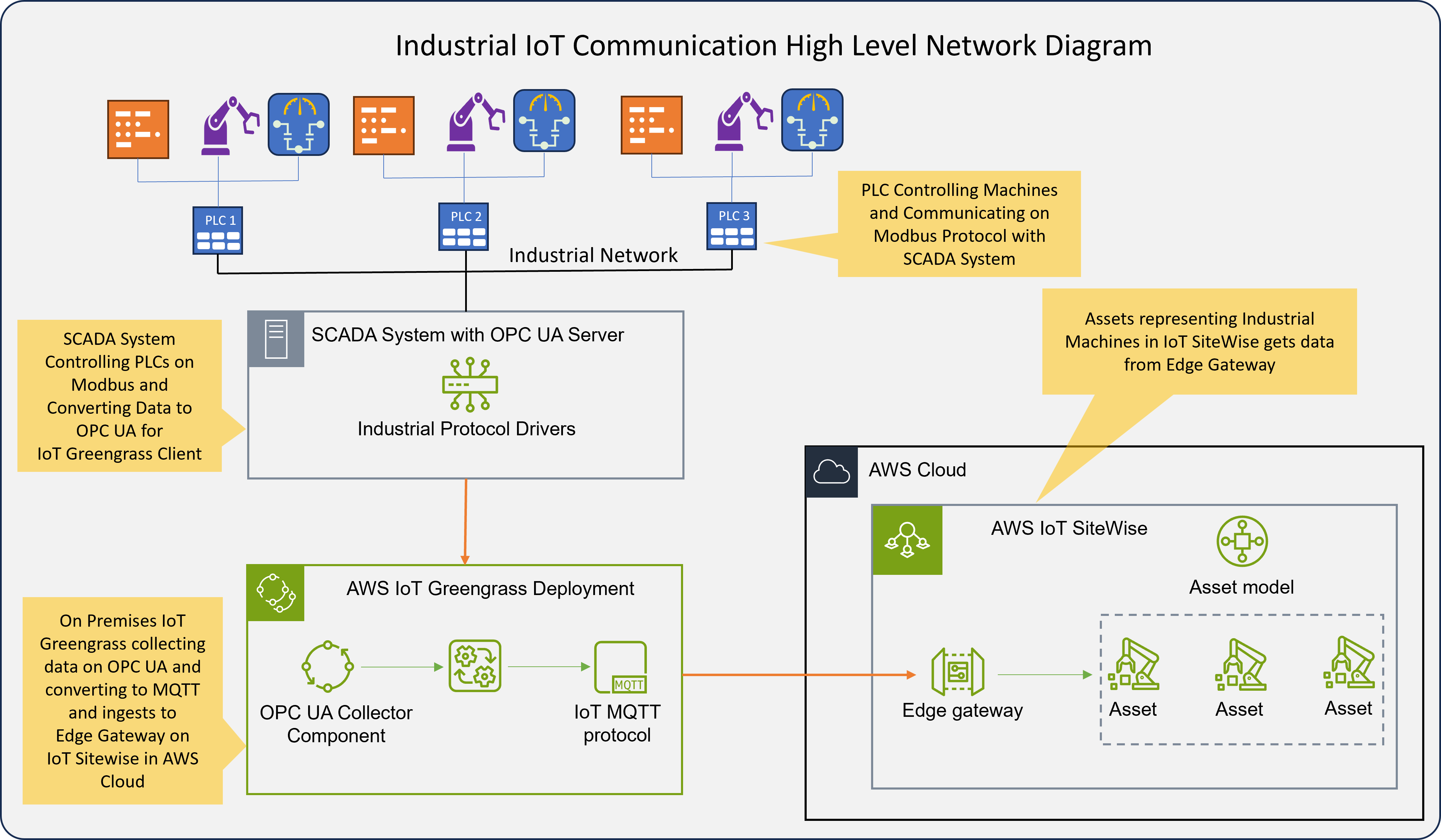

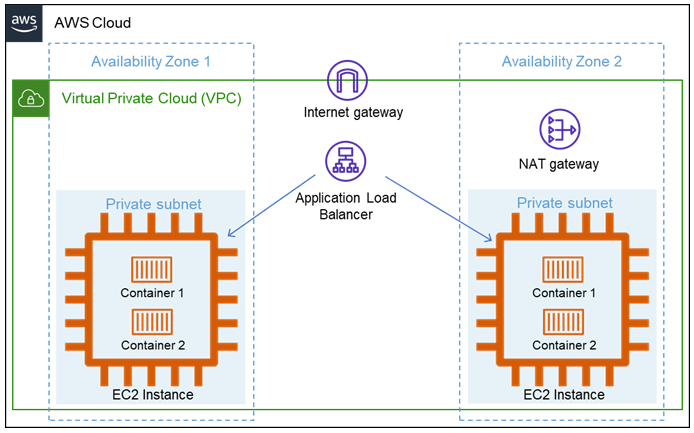

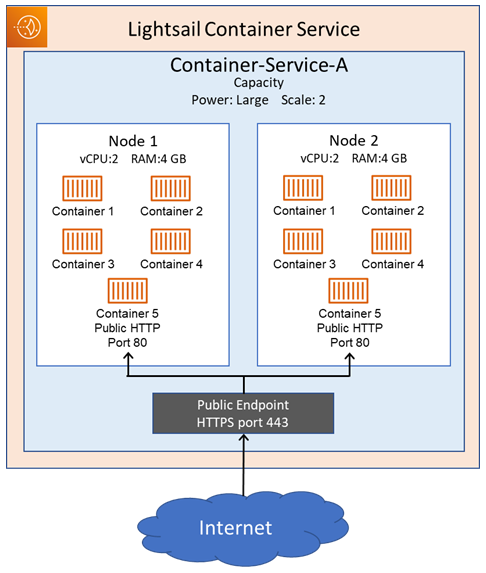

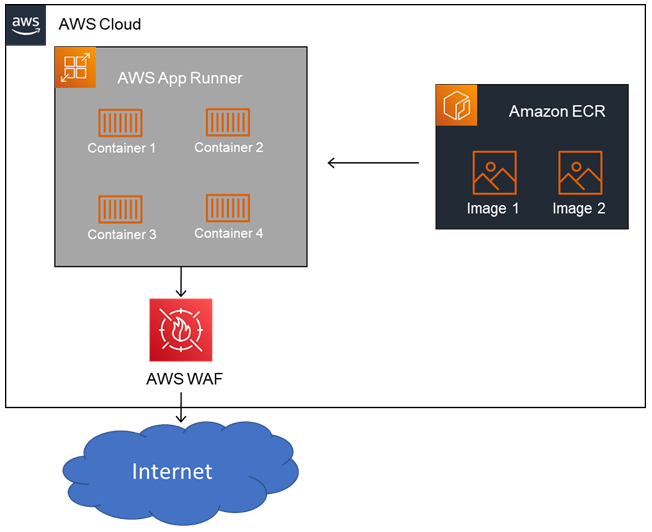

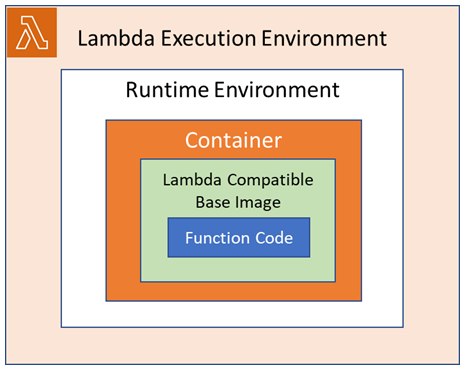

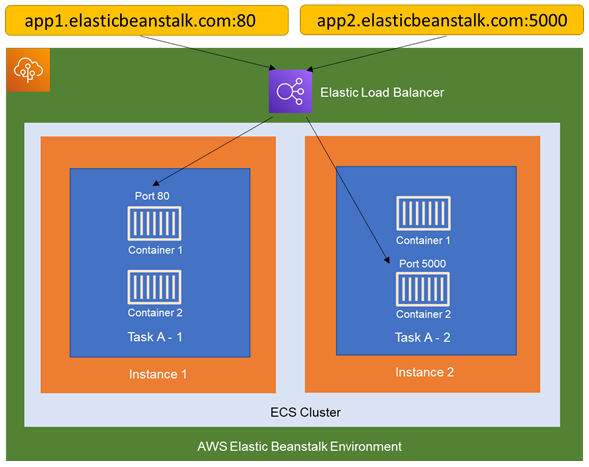

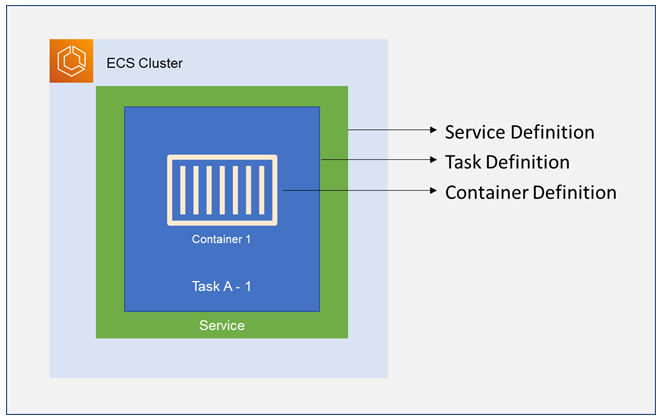

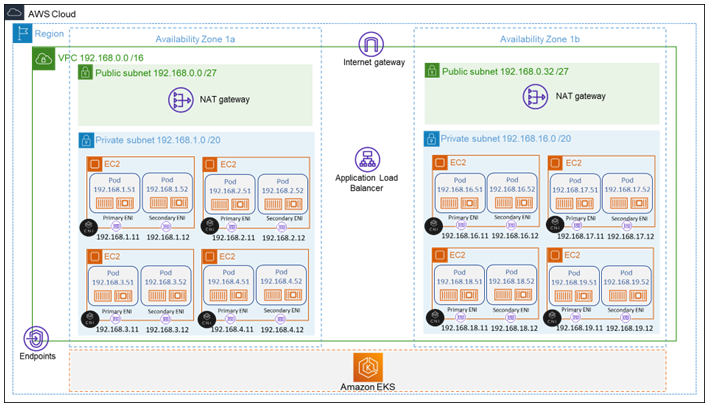

In the above architecture, the AI agents can be developed on Amazon Bedrock using its multi-agent collaboration feature. The industrial knowledge base for the agents can be deployed on a cost-effective Amazon Aurora PostgreSQL database with its vector extension (pgvector). Live data from the factory-floor SCADA systems can be ingested into AWS IoT SiteWise, and the agents can read and interpret this data along with the knowledge base. Plant engineers can interact with the AI agents from their mobile apps through API Gateway and Lambda functions.
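As a rough illustration of the Lambda piece of this architecture, the sketch below reads the latest value of one asset property from AWS IoT SiteWise and passes it, together with the engineer's question, to a Bedrock agent. It is not the code from my repo. The environment variable names (`ASSET_ID`, `PROPERTY_ID`, `AGENT_ID`, `AGENT_ALIAS_ID`), the request body shape and the helper names are assumptions for this example, and the AWS clients are passed in as parameters so the logic can be exercised without an AWS account.

```python
import json
import uuid


def latest_reading(sitewise, asset_id, property_id):
    """Fetch the most recent value of one asset property from AWS IoT SiteWise."""
    response = sitewise.get_asset_property_value(
        assetId=asset_id, propertyId=property_id
    )
    prop = response["propertyValue"]
    # The value sits under a typed key such as doubleValue or integerValue.
    value = next(iter(prop["value"].values()))
    return {"value": value, "quality": prop.get("quality")}


def ask_agent(agent_runtime, agent_id, alias_id, question, session_id=None):
    """Send a question to a Bedrock agent and join the streamed answer chunks."""
    response = agent_runtime.invoke_agent(
        agentId=agent_id,
        agentAliasId=alias_id,
        sessionId=session_id or str(uuid.uuid4()),
        inputText=question,
    )
    parts = []
    for event in response["completion"]:
        chunk = event.get("chunk")
        if chunk:  # skip non-text events such as traces
            parts.append(chunk["bytes"].decode("utf-8"))
    return "".join(parts)


def handle_request(event, sitewise, agent_runtime, config):
    """Combine the engineer's question with live data and ask the agent."""
    body = json.loads(event.get("body") or "{}")
    reading = latest_reading(sitewise, config["asset_id"], config["property_id"])
    question = (
        f"{body.get('question', '')}\n"
        f"Latest reading: {reading['value']} (quality: {reading['quality']})"
    )
    answer = ask_agent(
        agent_runtime, config["agent_id"], config["agent_alias_id"], question
    )
    return {
        "statusCode": 200,
        "body": json.dumps({"answer": answer, "reading": reading}),
    }


def lambda_handler(event, context):
    """Lambda entry point behind API Gateway (proxy integration assumed)."""
    import os

    import boto3  # imported here so the helpers above run without it

    config = {
        "asset_id": os.environ["ASSET_ID"],  # hypothetical variable names
        "property_id": os.environ["PROPERTY_ID"],
        "agent_id": os.environ["AGENT_ID"],
        "agent_alias_id": os.environ["AGENT_ALIAS_ID"],
    }
    return handle_request(
        event,
        boto3.client("iotsitewise"),
        boto3.client("bedrock-agent-runtime"),
        config,
    )
```

Injecting the clients into `handle_request` is a design choice for testability. In this sketch the live reading is simply appended to the prompt; letting the agent fetch SiteWise data itself through an action group is another option.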

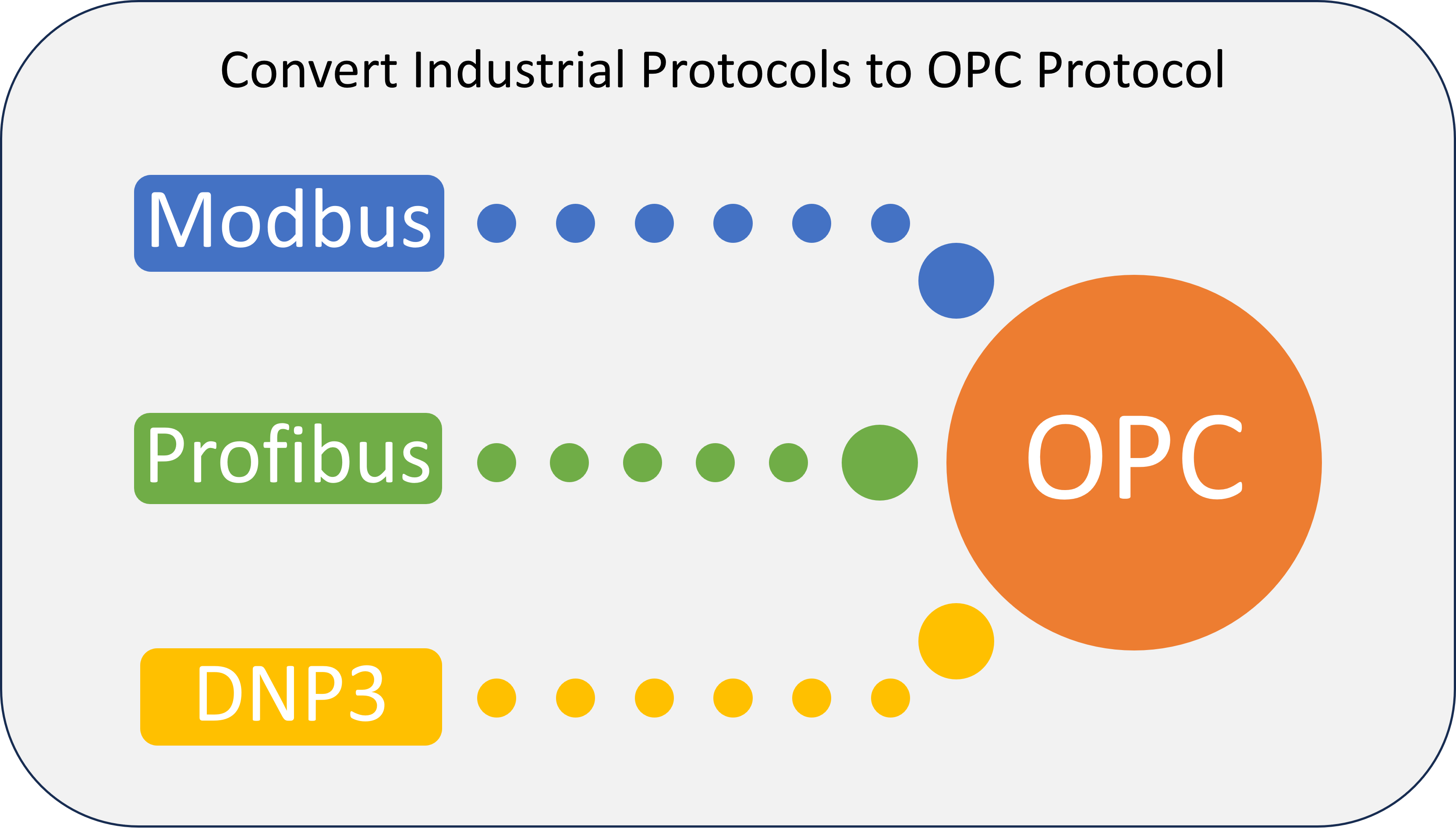

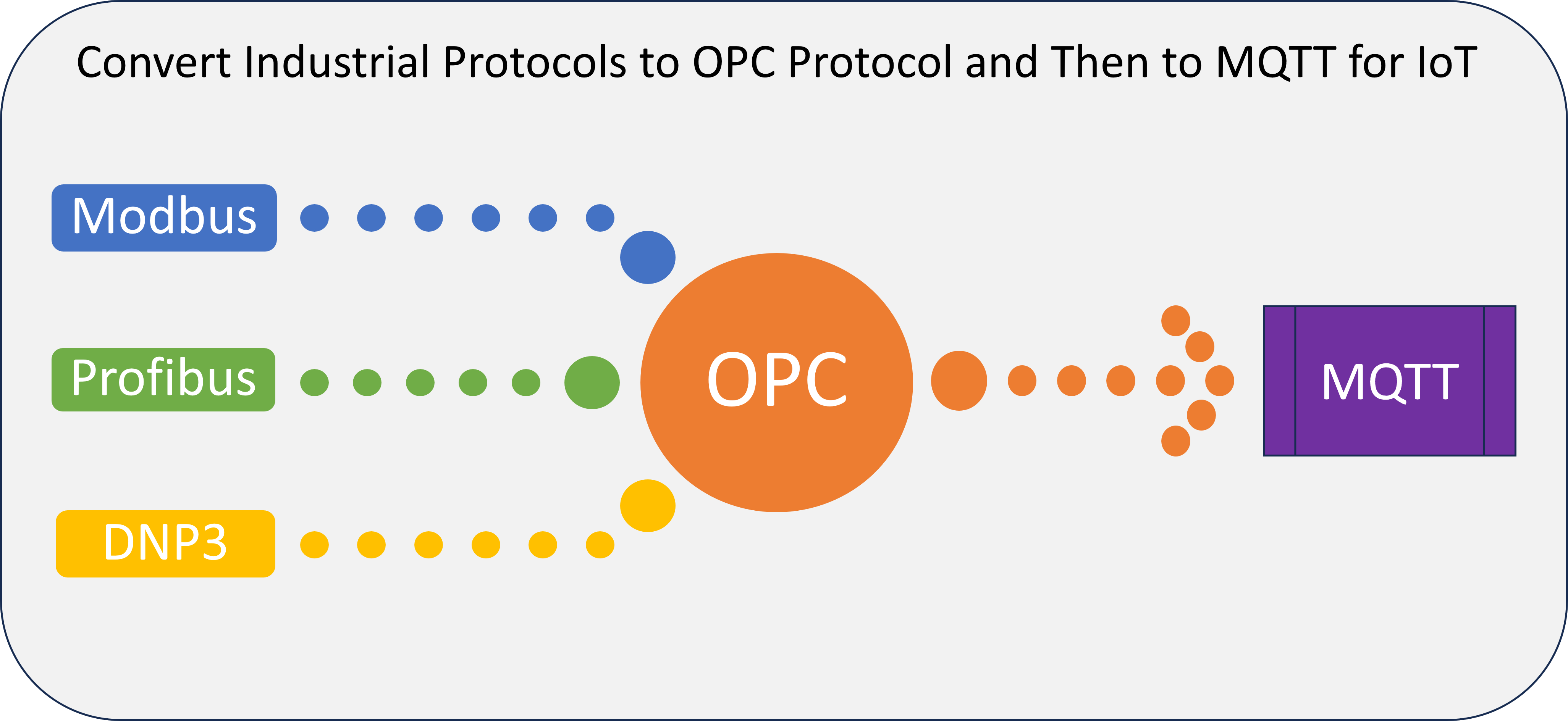

In my previous post I discussed industrial protocols from the perspective of IoT and Cloud; there you can find more information on integrating live factory data with the cloud.

In this repo I have demonstrated integrating Gen AI with IoT using a wind energy use case. There you can find the code for the Lambda functions, instructions for creating the Gen AI agent, and steps for configuring a simulation OPC server with Kepware.

In summary, the integration of Generative AI and IoT technologies in breakdown maintenance not only enhances the efficiency of troubleshooting but also ensures the safety of personnel and equipment. This innovative approach can revolutionize the way industries handle maintenance, leading to significant time and cost savings.