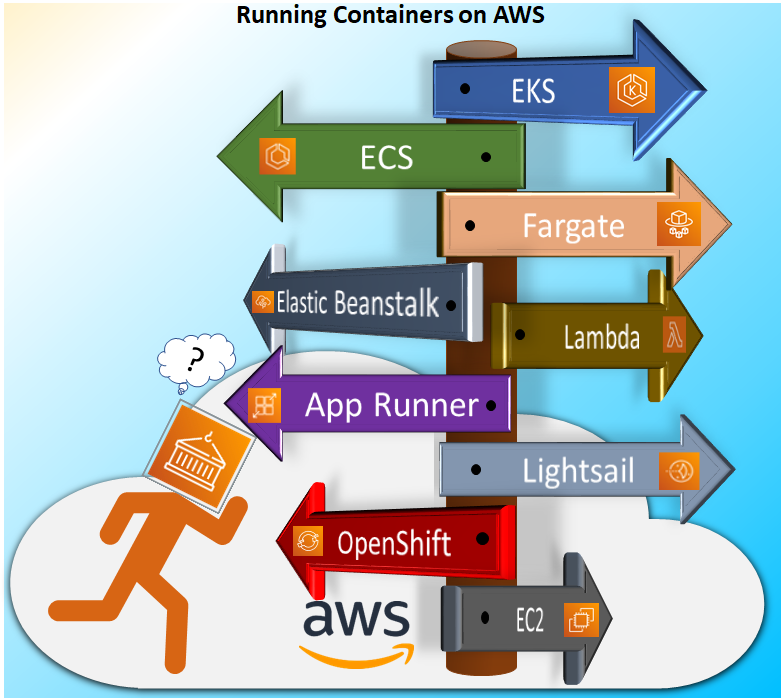

Running Containers on AWS as per Business Requirements and Capabilities

We can run containers on AWS with EKS, ECS, Fargate, Lambda, App Runner, Lightsail, Elastic Beanstalk, OpenShift, or just on EC2 instances. In this post, I will discuss how to choose the right AWS service based on our organization's requirements and capabilities.

In-Short

CaveatWisdom

Caveat: Meeting the business objectives and goals can become difficult if we don’t choose the right service based on our requirements and capabilities.

Wisdom:

- Understand the complexity of your application: how many microservices it has and how they interact with each other.

- Estimate how your application needs to scale as the business grows.

- Analyse the skill set and capabilities of your team, and how much time you can spend on administration and learning.

- Understand your organization's long-term policies and priorities.

In-Detail

You may wonder why AWS has so many services for running containers. One size does not fit all. We need to understand our business goals, our requirements and our team's capabilities before choosing a service.

Let us understand each service one by one.

All the services discussed below require knowledge of building container images with Docker and running them.

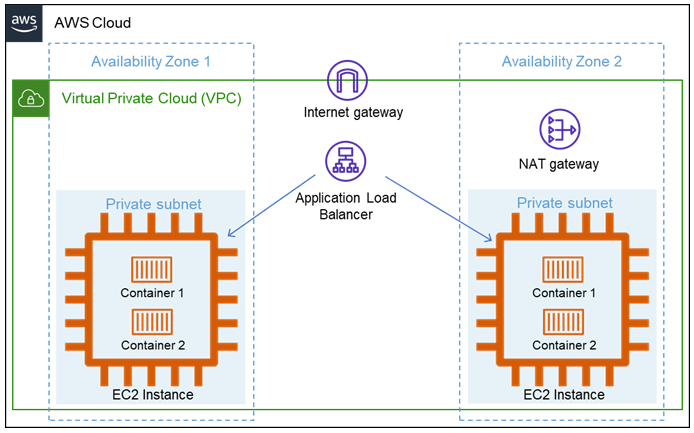

Running Containers on Amazon EC2 Manually

You can deploy and run containers on EC2 instances manually if you have just 1 to 4 applications, such as a website or a processing application, without any scaling requirements.

Organization Objectives:

- Run just 1 to 4 applications on the cloud with high availability.

- Have full control at the OS level.

- Have a steady workload all the time, without any scaling requirements.

Capabilities Required:

- The team should have a full understanding of AWS networking at the VPC level, including load balancers.

- Configure and run a container runtime such as the Docker daemon.

- Deploy application containers manually on the EC2 instances over SSH.

- Knowledge of maintaining the OS on EC2 instances.

The cost is predictable if there is no scaling requirement.

The disadvantages in this option are:

- We need to keep the OS and Docker updated manually.

- We need to constantly monitor the health of running containers manually.
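Monitoring container health manually usually means checking `docker ps` on every instance, over and over. As a minimal sketch of that chore, assuming the output of `docker ps -a --format '{{.Names}}|{{.Status}}'` has been collected from an instance (for example over SSH), the hypothetical helper `find_problem_containers` below flags containers that are not running or whose Docker health check reports `(unhealthy)`. It only parses text; it does not talk to Docker itself.

```python
def find_problem_containers(ps_output: str) -> dict[str, str]:
    """Return {container_name: status} for containers that need attention.

    Expects one line per container in the form "<name>|<status>", as printed by
    `docker ps -a --format '{{.Names}}|{{.Status}}'` (hypothetical collection step).
    """
    problems = {}
    for line in ps_output.strip().splitlines():
        name, _, status = line.partition("|")
        # Running containers report a status starting with "Up"; anything else
        # (Exited, Restarting, Created, Dead, ...) is not serving traffic.
        not_running = not status.startswith("Up")
        # Only images that define a HEALTHCHECK ever report "(unhealthy)".
        unhealthy = "(unhealthy)" in status
        if not_running or unhealthy:
            problems[name] = status
    return problems


if __name__ == "__main__":
    sample = (
        "web|Up 3 hours (healthy)\n"
        "worker|Up 2 minutes (unhealthy)\n"
        "cron|Exited (1) 5 minutes ago"
    )
    print(find_problem_containers(sample))
```

Someone still has to run this on a schedule, on every instance, and act on the result, which is exactly the operational burden the managed services below take away.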

What if you don’t want the headache of managing EC2 instances and monitoring the health of your containers? Enter Amazon Lightsail.

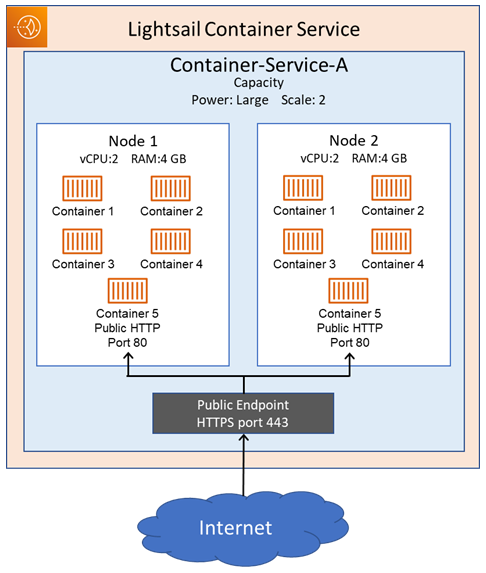

Running Containers with Amazon Lightsail

Amazon Lightsail is the easiest way to run containers. To run containers on Lightsail, we only need to define the power of each node (the size of the underlying EC2 instance) and the scale, that is, how many nodes to run. If the scale is more than 1, Lightsail replicates the container across all the nodes we specify. Lightsail uses ECS under the hood and manages the networking for us.

Organization Objectives:

- Run multiple applications on the cloud with high availability.

- Infrastructure should be fully managed by AWS with no maintenance.

- Have a steady workload and scale when there is a need.

- Minimal and predictable cost with bundled services, including a load balancer and a CDN.

Capabilities Required:

- The team only needs knowledge of running containers.

Lightsail can scale, but scaling must be done manually; we cannot configure autoscaling based on triggers such as an increase in traffic.
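Because Lightsail scaling is manual, "scaling" amounts to choosing a new node count ourselves. The sketch below, with hypothetical helper names, builds and validates the keyword arguments for the boto3 Lightsail client's `create_container_service` call, which takes `serviceName`, `power` and `scale`. The list of power sizes and the 20-node ceiling reflect Lightsail at the time of writing and are assumptions worth re-checking; the code itself makes no AWS calls.

```python
# Node sizes offered for Lightsail container services (assumed current list).
LIGHTSAIL_POWERS = ("nano", "micro", "small", "medium", "large", "xlarge")
# Assumed maximum number of nodes per container service; re-check the quota.
MAX_SCALE = 20


def container_service_request(name: str, power: str, scale: int) -> dict:
    """Validate and build kwargs for the boto3 Lightsail client's
    create_container_service (or update_container_service) call."""
    if power not in LIGHTSAIL_POWERS:
        raise ValueError(f"unknown power {power!r}; expected one of {LIGHTSAIL_POWERS}")
    if not 1 <= scale <= MAX_SCALE:
        raise ValueError(f"scale must be between 1 and {MAX_SCALE}, got {scale}")
    return {"serviceName": name, "power": power, "scale": scale}


def rescale(request: dict, new_scale: int) -> dict:
    """Manual scaling: a person picks the new node count; Lightsail only applies it."""
    return container_service_request(request["serviceName"], request["power"], new_scale)


if __name__ == "__main__":
    req = container_service_request("my-website", "micro", 2)
    print(req)
    # With credentials configured, these could be applied with, for example:
    #   boto3.client("lightsail").create_container_service(**req)
    #   boto3.client("lightsail").update_container_service(**rescale(req, 4))
    print(rescale(req, 4))
```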

What if you need more features, such as a CI/CD pipeline or integration with AWS WAF (Web Application Firewall)? Enter AWS App Runner.

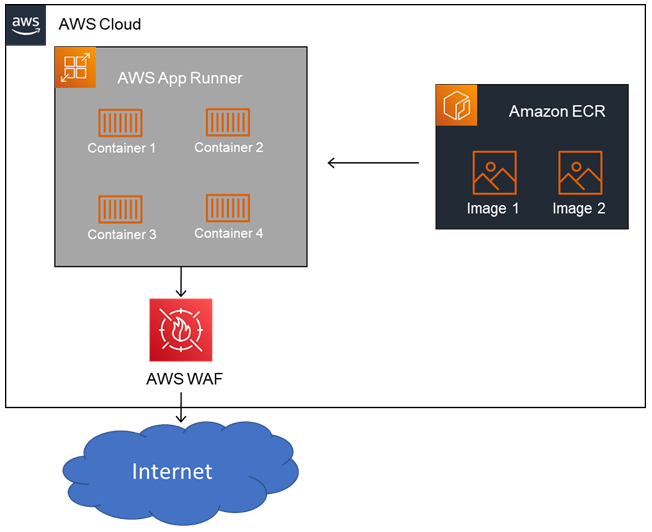

Running Containers with AWS App Runner

AWS App Runner is another easy service for running containers. We can configure auto scaling and secure the traffic with AWS WAF and with private endpoints in a VPC. App Runner connects directly to the image repository and deploys the containers. We can also integrate it with other AWS services such as CloudWatch, CloudTrail and X-Ray for advanced monitoring.
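App Runner scales on concurrent requests per instance, governed by an auto scaling configuration with a maximum concurrency per instance and a minimum and maximum instance count. The function below is a simplified, back-of-the-envelope model of that behaviour, not App Runner's actual algorithm; the defaults of 100 concurrent requests, 1 instance and 25 instances are assumed from the default configuration and should be verified for your account.

```python
import math


def desired_instances(concurrent_requests: int,
                      max_concurrency: int = 100,
                      min_size: int = 1,
                      max_size: int = 25) -> int:
    """Approximate how many instances concurrency-based scaling would target.

    Simplified model: enough instances that none exceeds max_concurrency,
    clamped to [min_size, max_size]. Default values are assumptions.
    """
    needed = math.ceil(concurrent_requests / max_concurrency)
    return max(min_size, min(max_size, needed))


if __name__ == "__main__":
    for load in (0, 80, 250, 5000):
        print(f"{load} concurrent requests -> {desired_instances(load)} instances")
```

The clamp is the design point: `min_size` keeps at least one warm instance, and `max_size` caps cost even when traffic spikes beyond what the fleet can absorb.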

Organization Objectives:

- Run multiple applications on the cloud with high availability.

- Infrastructure should be fully managed by AWS with no maintenance.

- Auto scale to match varying workloads.

- Implement strong security features, such as traffic filtering and isolating workloads in a private, secured environment.

Capabilities Required:

- The team should have knowledge of running containers.

- Knowledge of AWS services such as WAF, VPC and CloudWatch is required to handle the advanced requirements.

App Runner supports full-stack web applications, including front-end and back-end services. At present, App Runner supports only stateless applications; stateful applications are not supported.

What if you need to run containers in a serverless fashion, i.e., an event-driven architecture in which the container runs only when needed (invoked by an event) and you pay only for the time the process runs to service the request? Enter AWS Lambda.

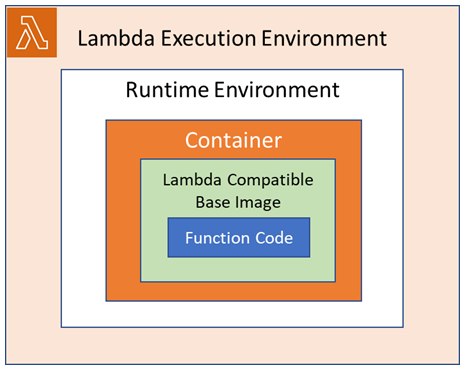

Running Containers with AWS Lambda

With Lambda, you pay for the number of requests and for the time your container function runs, measured in milliseconds and weighted by how much memory you allocate to the function. If your function runs for 300 milliseconds to process a request, you pay for only those 300 milliseconds of compute time. You can build your container image from a base image provided by AWS. These base images are open source, published by AWS, and preloaded with a language runtime and the other components required to run a container image on Lambda. If we choose our own base image, we need to add the appropriate runtime interface client for our language so that the function can receive invocation events and respond to them.
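The duration charge is easiest to reason about in GB-seconds: billed milliseconds (rounded up to the nearest millisecond) multiplied by allocated memory. The sketch below works through the 300 millisecond example, assuming a 512 MB function; the helper names are hypothetical, prices are deliberately left as parameters because they vary by Region and architecture, and the free tier is ignored.

```python
import math


def billed_gb_seconds(duration_ms: float, memory_mb: int) -> float:
    """Duration charge units (GB-seconds) for a single invocation.

    Duration is rounded up to the nearest millisecond, then weighted by the
    memory allocated to the function.
    """
    billed_ms = math.ceil(duration_ms)
    return (billed_ms / 1000) * (memory_mb / 1024)


def estimated_cost(invocations: int, duration_ms: float, memory_mb: int,
                   price_per_gb_second: float, price_per_request: float) -> float:
    """Duration charge plus request charge; look both prices up for your Region."""
    compute = invocations * billed_gb_seconds(duration_ms, memory_mb) * price_per_gb_second
    requests = invocations * price_per_request
    return compute + requests


if __name__ == "__main__":
    print("GB-seconds per request:", billed_gb_seconds(300, 512))
```

For the 300 ms, 512 MB example, each request costs 0.15 GB-seconds of duration; doubling the memory doubles that figure for the same duration, which is why right-sizing memory matters on Lambda.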

Organization Objectives:

- Run multiple applications on the cloud with high availability.

- Infrastructure should be fully managed by AWS with no maintenance.

- Auto scale to match varying workloads.

- Implement strong security features, such as traffic filtering and isolating workloads in a private, secured environment.

- Implement an event-based architecture.

- Pay only for the requests processed, with no charge for idle time.

- Integrate seamlessly with other services, such as API Gateway, where throttling is needed.

Capabilities Required:

- The team should have knowledge of running containers.

- The team should have a deep understanding of AWS Lambda, event-based architectures on AWS, and related AWS services.

- Existing applications may need to be modified to handle event notifications and to integrate with the runtime interface clients provided by the Lambda base images.
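Modifying an existing application for Lambda usually means wrapping its logic in a handler function that the runtime interface client calls with each event. A minimal Python sketch, assuming an API Gateway proxy-style event (JSON request body under `body`) and a hypothetical `process_order` function standing in for existing business logic. In an AWS Python base image, the image's `CMD` would name this handler, for example `app.handler` if the file is `app.py`.

```python
import json


def process_order(order: dict) -> dict:
    """Hypothetical existing business logic, unchanged from the original app."""
    return {"order_id": order.get("order_id"), "status": "accepted"}


def handler(event, context):
    """Lambda entry point called by the runtime interface client.

    Assumes an API Gateway proxy-style event, where the request body
    arrives as a JSON string under "body".
    """
    order = json.loads(event.get("body") or "{}")
    result = process_order(order)
    # API Gateway proxy integrations expect statusCode/headers/body back.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(result),
    }


if __name__ == "__main__":
    # Local smoke test with a hand-made event; context is unused here.
    fake_event = {"body": json.dumps({"order_id": "42"})}
    print(handler(fake_event, None))
```

Keeping the business logic in its own function means the same code can still be called from the original application, with only the thin handler being Lambda-specific.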

We need to be aware of Lambda's limitations: it is stateless, a single invocation can run for at most 15 minutes, and it provides only temporary storage (/tmp) for buffering operations.

What if you need more transparency, i.e., access to the underlying infrastructure, while the infrastructure is still managed by AWS? Enter AWS Elastic Beanstalk.

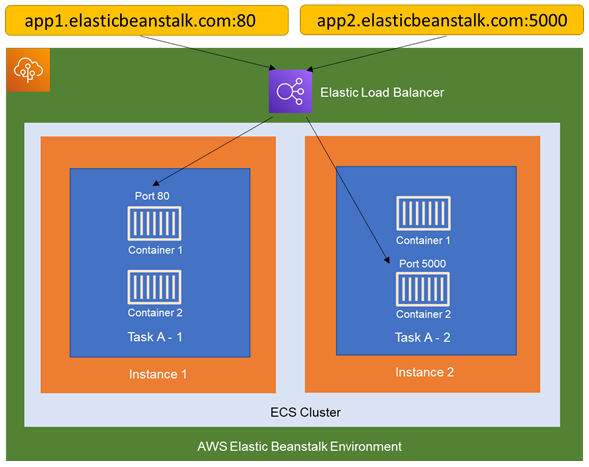

Running Containers with AWS Elastic Beanstalk

We can run any containerized application on AWS Elastic Beanstalk, which deploys and manages the infrastructure on our behalf. We can create and manage separate environments for development, testing and production, and deploy any version of the application to any environment. We can do rolling deployments or blue/green deployments. Elastic Beanstalk provisions the infrastructure, such as EC2 instances and load balancers within a VPC, using AWS CloudFormation templates built on best practices.

For multi-container environments, Elastic Beanstalk uses ECS under the hood: ECS provides the cluster that runs the Docker containers, and Elastic Beanstalk manages the tasks running on that cluster.
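A rolling deployment updates instances in batches so that the rest of the fleet keeps serving traffic. In Elastic Beanstalk this is controlled by the `DeploymentPolicy`, `BatchSizeType` (`Fixed` or `Percentage`) and `BatchSize` options in the `aws:elasticbeanstalk:command` namespace. The hypothetical helper below only models how a fleet is split into batches; the rounding used for percentages is an assumption, and it does not reproduce Elastic Beanstalk's health checks.

```python
import math


def rolling_batches(instances: list[str], batch_size: int,
                    batch_size_type: str = "Fixed") -> list[list[str]]:
    """Split instances into the batches a rolling deployment updates in turn.

    batch_size_type mirrors the "Fixed" / "Percentage" choice; rounding a
    percentage up to at least one instance is an assumption of this sketch.
    """
    if batch_size_type == "Percentage":
        size = max(1, math.ceil(len(instances) * batch_size / 100))
    elif batch_size_type == "Fixed":
        size = batch_size
    else:
        raise ValueError(f"unknown batch_size_type {batch_size_type!r}")
    if size < 1:
        raise ValueError("batch size must be at least 1")
    return [instances[i:i + size] for i in range(0, len(instances), size)]


if __name__ == "__main__":
    fleet = ["i-a", "i-b", "i-c", "i-d", "i-e"]
    for number, batch in enumerate(rolling_batches(fleet, 2), start=1):
        # Each batch is taken out of service and updated while the others
        # keep serving traffic, then the next batch starts.
        print(f"batch {number}: {batch}")
```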

Organization Objectives:

- Run multiple applications on the cloud with high availability.

- Infrastructure should be fully managed by AWS with no maintenance.

- Auto scale to match varying workloads.

- Implement strong security features, such as traffic filtering and isolating workloads in a private, secured environment.

- Implement multiple environments for development, staging and production.

- Deploy with strategies such as blue/green and rolling updates.

- Access to the underlying instances.

Capabilities Required:

- The team should have knowledge of running containers.

- Foundational knowledge of AWS and Elastic Beanstalk is enough.

What if you need to implement a more complex microservices architecture with advanced functionality like service mesh and orchestration? Enter Amazon Elastic Container Service directly.

Running Containers with Amazon Elastic Container Service (Amazon ECS)

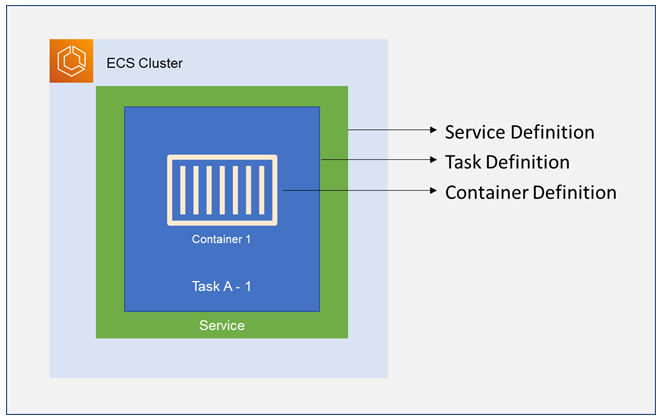

When we want to implement a complex microservices architecture with container orchestration, ECS is the right choice. Amazon ECS is a fully managed service with built-in best practices for operations and configuration. It removes the complexity of managing the control plane and gives us the option to run our workloads anywhere, in the cloud or on premises (with ECS Anywhere).

ECS offers two launch types for running tasks: Fargate and EC2. Fargate is a serverless, low-overhead option that lets us run containers without managing infrastructure. EC2 is suitable for large workloads that consistently require high CPU and memory.

A task definition in ECS is the blueprint of our microservice; it can describe one or more containers, and a task is a running instance of that blueprint. We can run tasks manually for applications like batch jobs, or use the service scheduler, which keeps the desired number of tasks running for long-running stateless microservices. By default, the service scheduler spreads tasks across multiple Availability Zones, and we can control placement further with task placement strategies and constraints.
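To make the blueprint idea concrete, the hypothetical helper below builds a task definition for one microservice made of two containers (an app container and a proxy sidecar), shaped like the ECS `RegisterTaskDefinition` request so it could be passed to boto3's `register_task_definition`. The second function is a simplified model of the `spread` placement strategy over Availability Zones (expressed in ECS as `{"type": "spread", "field": "attribute:ecs.availability-zone"}` for the EC2 launch type); it illustrates the idea and is not the scheduler's real algorithm. Image names are placeholders.

```python
def build_task_definition(family: str, app_image: str, proxy_image: str) -> dict:
    """Task definition (the blueprint) for one microservice with two containers.

    Shaped like the ECS RegisterTaskDefinition request, so it could be sent as
    boto3.client("ecs").register_task_definition(**task_def).
    """
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # required for Fargate tasks
        "cpu": "256",             # task-level size: 0.25 vCPU
        "memory": "512",          # 512 MiB, a valid pairing with 256 CPU units
        "containerDefinitions": [
            {"name": "app", "image": app_image, "essential": True},
            {
                "name": "proxy",  # sidecar that fronts the app container
                "image": proxy_image,
                "essential": True,
                "portMappings": [{"containerPort": 80, "protocol": "tcp"}],
            },
        ],
    }


def spread_across_azs(task_count: int, zones: list[str]) -> dict[str, int]:
    """Simplified 'spread' model: each new task goes to the zone that
    currently runs the fewest tasks (ties go to the earliest zone listed)."""
    placed = {zone: 0 for zone in zones}
    for _ in range(task_count):
        target = min(zones, key=lambda zone: placed[zone])
        placed[target] += 1
    return placed


if __name__ == "__main__":
    task_def = build_task_definition(
        "orders-service", "my-registry/orders:1.0", "my-registry/proxy:1.0"
    )
    print(task_def["family"], [c["name"] for c in task_def["containerDefinitions"]])
    print(spread_across_azs(5, ["us-east-1a", "us-east-1b", "us-east-1c"]))
```

With Fargate, as in this task definition, placement strategies are not configurable and ECS spreads tasks across Availability Zones on its own; the spread model applies mainly to the EC2 launch type.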

Organization Objectives:

- Run complex microservices architecture with high availability and scalability.

- Orchestrate the containers as per complex business requirements.

- Integrate with AWS services seamlessly.

- A low learning curve for a team that wants to take advantage of the cloud.

- Infrastructure should be fully managed by AWS with no maintenance.

- Auto scale to match varying workloads.

- Implement strong security features, such as traffic filtering and isolating workloads in a private, secured environment.

- Implement complex DevOps strategies with managed services for CI/CD pipelines.

- Access to the underlying instances for some applications and at the same time have a serverless option for some other workloads.

- Implement a service mesh for microservices with a managed service like App Mesh.

Capabilities Required:

- The team should have knowledge of running containers.

- An intermediate level of understanding of AWS services is required.

- Good knowledge of ECS orchestration and scheduling configuration will add a lot of value.

- Optionally, developers should know how to implement a service mesh with App Mesh, if one is required.

What if you need to migrate existing on-premises container workloads running on Kubernetes to the cloud, or your organization's policy is to adopt open-source technologies? Enter Amazon Elastic Kubernetes Service.

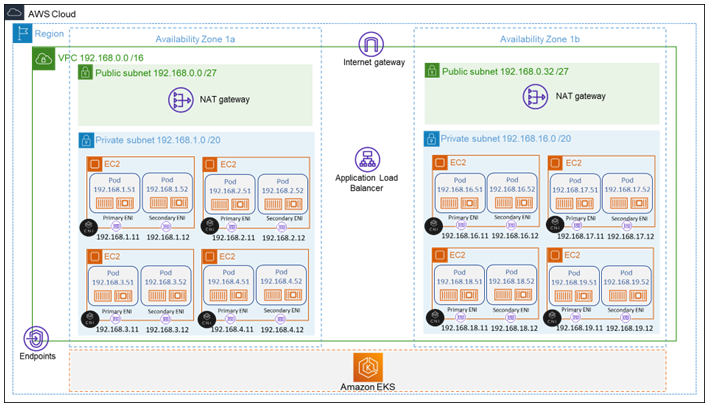

Running Containers with Amazon Elastic Kubernetes Service (Amazon EKS)

Amazon EKS is a fully managed service for the Kubernetes control plane, and it gives us the option to run workloads on self-managed EC2 instances, managed EC2 node groups, or the fully managed, serverless Fargate. It removes the headache of managing and configuring the Kubernetes control plane, with built-in high availability and scalability. EKS runs upstream, CNCF-conformant Kubernetes, so workloads that presently run on an on-premises Kubernetes cluster should generally work on EKS as well. With EKS Anywhere, we can also extend EKS to on-premises infrastructure and see those clusters in the same EKS console.
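Because EKS runs upstream Kubernetes, the manifests used on premises can be applied unchanged. As an illustration, the hypothetical helper below builds a standard `apps/v1` Deployment as a Python dict and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The names, image and port are placeholders, and the zone spread constraint is an optional extra rather than something EKS requires.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3) -> dict:
    """A plain apps/v1 Deployment; nothing in it is specific to EKS."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Ask the scheduler to spread replicas across zones.
                    "topologySpreadConstraints": [{
                        "maxSkew": 1,
                        "topologyKey": "topology.kubernetes.io/zone",
                        "whenUnsatisfiable": "ScheduleAnyway",
                        "labelSelector": {"matchLabels": labels},
                    }],
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": 8080}],
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    # Redirect to a file and run `kubectl apply -f deploy.json` against
    # either the on-premises cluster or the EKS cluster.
    print(json.dumps(deployment_manifest("orders", "my-registry/orders:1.0"), indent=2))
```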

Organization Objectives:

- Adopt open-source technologies as a policy.

- Migrate existing workloads on Kubernetes.

- Run complex microservices architecture with high availability and scalability.

- Orchestrate the containers as per complex business requirements.

- Integrate with AWS services seamlessly.

- Infrastructure should be fully managed by AWS with no maintenance.

- Auto scale to match varying workloads.

- Implement strong security features, such as traffic filtering and isolating workloads in a private, secured environment.

- Implement complex DevOps strategies with managed services for CI/CD pipelines.

- Access to the underlying instances for some applications and at the same time have a serverless option for some other workloads.

- Implement a service mesh for microservices with a managed service like App Mesh.

Capabilities Required:

- The team should have knowledge of running containers.

- An intermediate level of understanding of AWS services is required, and a deep understanding of AWS networking for Kubernetes will help a lot; you can read my previous blog here.

- Kubernetes has a steep learning curve, so the team should spend sufficient time learning it.

- Good knowledge of EKS orchestration and scheduling configuration.

- Optionally, developers should know how to implement a service mesh with App Mesh, if one is required.

- The team should know how to handle Kubernetes version updates; you can refer to my vlog here.

Running Containers with Red Hat OpenShift Service on AWS (ROSA)

If the organization manages its existing workloads on Red Hat OpenShift and wants to take advantage of the AWS Cloud, it can migrate easily to Red Hat OpenShift Service on AWS (ROSA), which is a managed service. We can use ROSA to create Kubernetes clusters using the Red Hat OpenShift APIs and tools, and have access to the full breadth and depth of AWS services. We can also access Red Hat OpenShift licensing, billing and support directly through AWS.

I have seen many organizations adopt multiple services to run their container workloads on AWS. It is not necessary to stick to one kind of service; in a complex enterprise architecture, it is recommended to keep all options open and adapt as business needs change.
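As a compact recap, the rules of thumb from this post can be encoded as a hypothetical decision helper. The flags and their ordering are a simplification, not an official AWS decision tree, and real choices should still weigh cost, team skills and long-term policy; the point is that evaluating each workload separately can naturally lead to several services side by side.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical yes/no summary of one workload's needs."""
    runs_on_openshift: bool = False
    kubernetes_or_open_source_policy: bool = False
    complex_microservices: bool = False
    needs_instance_access: bool = False
    needs_autoscaling: bool = False
    event_driven: bool = False


def suggest_service(w: Workload) -> str:
    """Apply this post's rules of thumb, most specific requirement first."""
    if w.runs_on_openshift:
        return "ROSA"
    if w.kubernetes_or_open_source_policy:
        return "Amazon EKS"
    if w.complex_microservices:
        return "Amazon ECS"
    if w.needs_instance_access:
        # Managed instances that scale vs. a few hand-run instances with no scaling.
        return "AWS Elastic Beanstalk" if w.needs_autoscaling else "Amazon EC2 (manual)"
    if w.event_driven:
        return "AWS Lambda"
    if w.needs_autoscaling:
        return "AWS App Runner"
    return "Amazon Lightsail"


if __name__ == "__main__":
    # Different workloads in one organization can land on different services.
    portfolio = {
        "marketing-site": Workload(),
        "image-resizer": Workload(event_driven=True),
        "orders-platform": Workload(complex_microservices=True, needs_autoscaling=True),
    }
    for name, workload in portfolio.items():
        print(f"{name}: {suggest_service(workload)}")
```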