Understanding Industrial Protocols from the Perspective of IoT and Cloud

In-Short

CaveatWisdom

Caveat: To take advantage of the latest technologies like Generative AI on the Cloud, data is being ingested into the Cloud from many different sources. When it comes to real-time industrial data, it’s important to understand the nature of the data and its flow from its source on the shop floor to its destination in the cloud.

Wisdom: To understand the nature of data and its flow, we need to understand the protocols involved at different levels of data flow, like Modbus, Profibus, EtherCAT, DNP3, OPC, MQTT, etc.

In-Detail

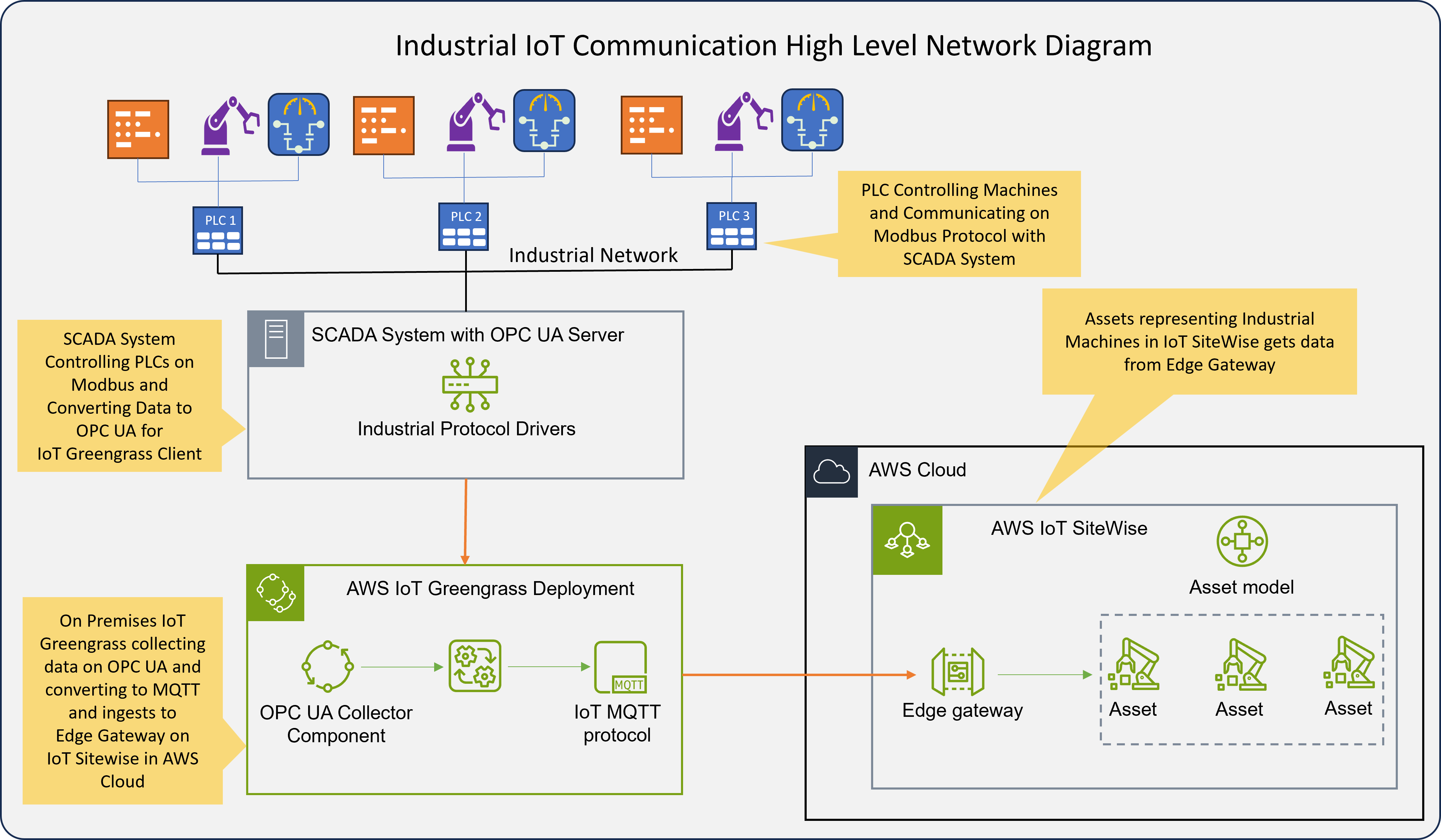

Before we jump into IoT and the Cloud, it’s important to understand that sophisticated industrial automation systems, which include many sensors, instruments, actuators, PLCs, SCADA systems, etc., existed decades before the advent of Cloud and IoT technologies.

History

Industry 1.0 started with the advent of machines powered by steam engines, which replaced tools powered by human labour. This was during the 1760s.

Industry 2.0 started when machines were powered by electricity, which made production more efficient. This was during the 1870s.

Industry 3.0 started when machines were controlled by computers (Programmable Logic Controllers, or PLCs) and SCADA (Supervisory Control and Data Acquisition) systems. This was during the 1970s.

Industry 4.0 started with the advent of Cloud and IoT technologies around 2011. This enabled analysing huge amounts of industrial data in relation to enterprise data.

Automation in industries is implemented with the help of Sensors, Instruments, PLCs, Actuators, Relays and SCADA systems.

Protocols:

To establish communication between sensors, instruments, PLCs and SCADA systems, and to support their own products, major industrial automation companies such as Schneider Electric, Siemens, Allen-Bradley, GE, Mitsubishi and others have developed many industrial protocols like Modbus, Profibus, EtherCAT, DNP3, etc. If we visit any industrial site, such as a refinery, a cement plant or a wind farm, we find automation systems and instruments working on these protocols.

Modbus: Modbus is a data communication protocol that allows devices to communicate with each other over networks and buses. Modbus can be used over serial, TCP/IP, and UDP, and the same protocol can be used regardless of the connection type.
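To make the "same protocol, different connection" idea concrete, here is a minimal sketch of a Modbus TCP "Read Holding Registers" request built by hand. The function-code-plus-data part (the PDU) is what stays the same across transports; Modbus TCP wraps it in a 7-byte MBAP header. The function names, the unit ID and the register address are illustrative assumptions, not taken from any particular device.

```python
import struct

READ_HOLDING_REGISTERS = 0x03  # standard Modbus function code


def build_read_holding_registers(transaction_id, unit_id, start_address, quantity):
    """Build a Modbus TCP request: 7-byte MBAP header followed by a 5-byte PDU."""
    # PDU: function code, starting register address, number of registers
    pdu = struct.pack(">BHH", READ_HOLDING_REGISTERS, start_address, quantity)
    # MBAP: transaction id, protocol id (always 0 for Modbus),
    # length of everything after the length field (unit id + PDU), unit id
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


def parse_read_holding_registers_response(frame):
    """Return the 16-bit register values carried in a normal response frame."""
    function_code, byte_count = struct.unpack(">BB", frame[7:9])
    if function_code & 0x80:
        # In an exception response the byte after the function code is the error code
        raise ValueError(f"Modbus exception response, code {frame[8]}")
    return list(struct.unpack(f">{byte_count // 2}H", frame[9:9 + byte_count]))


# Hypothetical example: ask unit 17 for 3 registers starting at address 0x006B
request = build_read_holding_registers(1, 17, 0x006B, 3)
print(request.hex())
```

The whole request is 12 bytes. Over a serial line (Modbus RTU) the same PDU would be framed with a device address and a CRC instead of the MBAP header.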

Profibus: Profibus is a fieldbus communication standard for industrial automation that allows devices like sensors, controllers, and actuators to share process values. It’s a digital network that connects field devices to control systems. Profibus is used in many industries, including manufacturing, process industries, and factory automation.

Many of these industrial protocols are synchronous in nature, with a client-server architecture. They are designed to operate within the plant network and deliver data with very low latency, down to the sub-millisecond range in some cases, for machine operations.

As industrial systems became more complex, with equipment from multiple vendors in a single plant, major industrial automation companies formed an organization called the OPC Foundation to define a standard protocol, OPC, that is interoperable with the major industrial protocols. Initially, OPC stood for “OLE for Process Control”, and its first specification, OPC DA (Data Access), was based on Microsoft’s OLE (Object Linking and Embedding) technology, which was used for communication between applications in the Windows ecosystem. OPC DA is now a legacy protocol (still used in many older plants), and it has been succeeded by OPC UA, which works across multiple operating systems. Today the acronym OPC stands for “Open Platform Communications” and UA stands for “Unified Architecture”. You can find more information at the OPC Foundation.

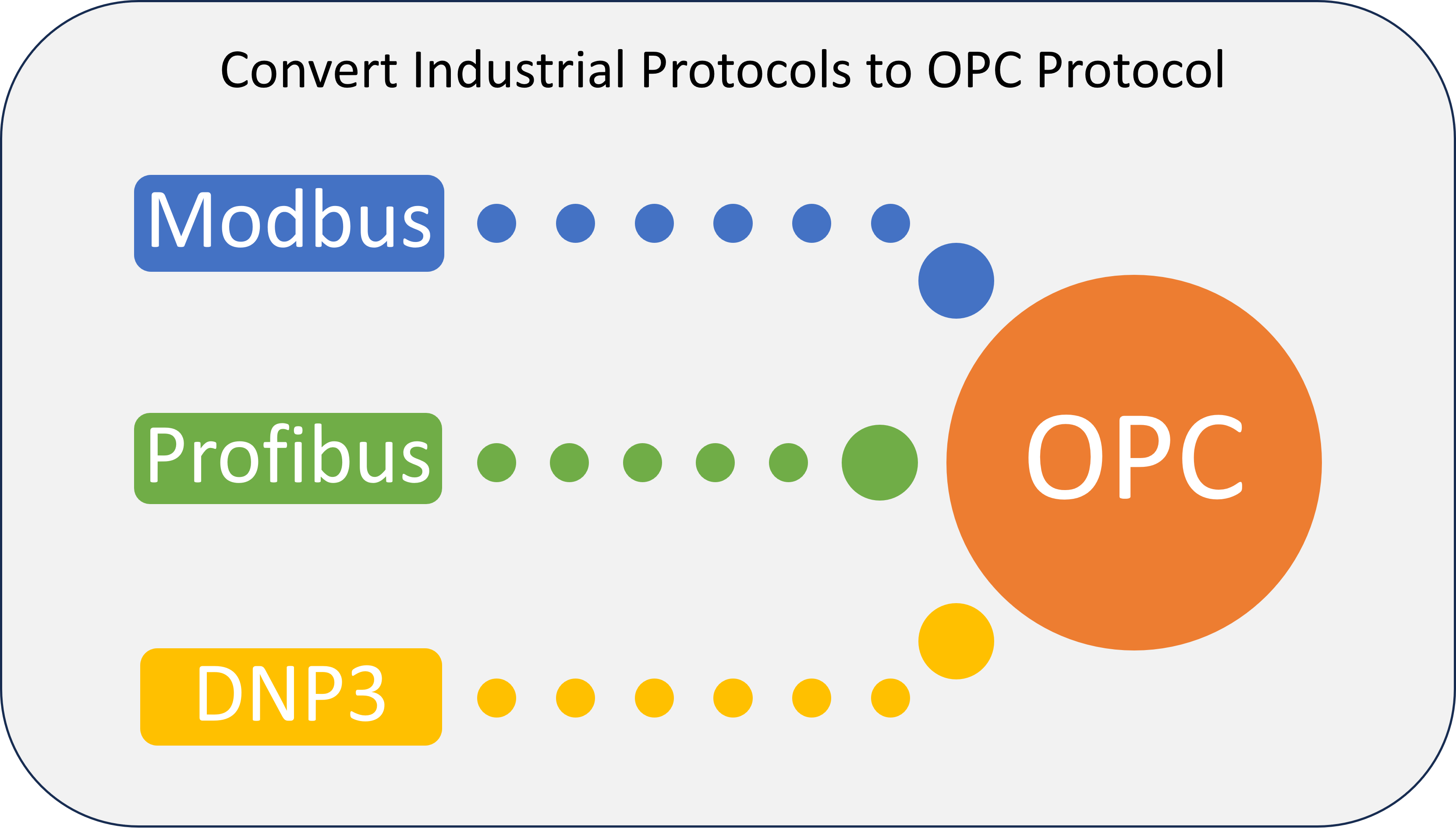

As a first step to ingest data into the cloud, we need to convert the industrial protocols to OPC UA. We can write driver software for that in .NET or Java using the OPC standard from the OPC Foundation, or we can use software from companies that have already done it. There are many providers of OPC software, and open-source implementations are also available. Some major providers are Kepware and MatrikonOPC.

The OPC server polls data from the different devices through its industrial protocol drivers and makes that data available over OPC to its clients.
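The polling pattern described above can be sketched in a few lines. This is only an illustration of the idea, not how any real OPC server is implemented: the class name, the tag names and the driver callables are all hypothetical, and the "good"/"bad" quality labels are a simplification of the quality information OPC attaches to values.

```python
import time


class PollingTagServer:
    """Polls device drivers and caches the latest value of each tag."""

    def __init__(self, drivers):
        # drivers: mapping of tag name -> zero-argument callable that reads the
        # value from a device (a hypothetical stand-in for a protocol driver)
        self._drivers = drivers
        self._cache = {}

    def poll_once(self):
        """Read every tag once and refresh the cache."""
        for tag, read_from_device in self._drivers.items():
            try:
                value, quality = read_from_device(), "good"
            except Exception:
                # Broad catch for the sketch: keep the last known value
                # but flag it as unreliable
                value, quality = self._cache.get(tag, {}).get("value"), "bad"
            self._cache[tag] = {"value": value, "quality": quality, "timestamp": time.time()}

    def read(self, tag):
        """What a client sees: the cached value, not a live device read."""
        return self._cache[tag]


def broken_pump_driver():
    raise TimeoutError("device did not answer")


server = PollingTagServer({
    "boiler/temperature": lambda: 72.5,  # pretend Modbus read
    "pump/speed": broken_pump_driver,    # pretend Profibus read that fails
})
server.poll_once()
print(server.read("boiler/temperature")["value"], server.read("pump/speed")["quality"])
```

The point of the design is that clients never talk to the field devices directly; they only see whatever the last polling cycle collected.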

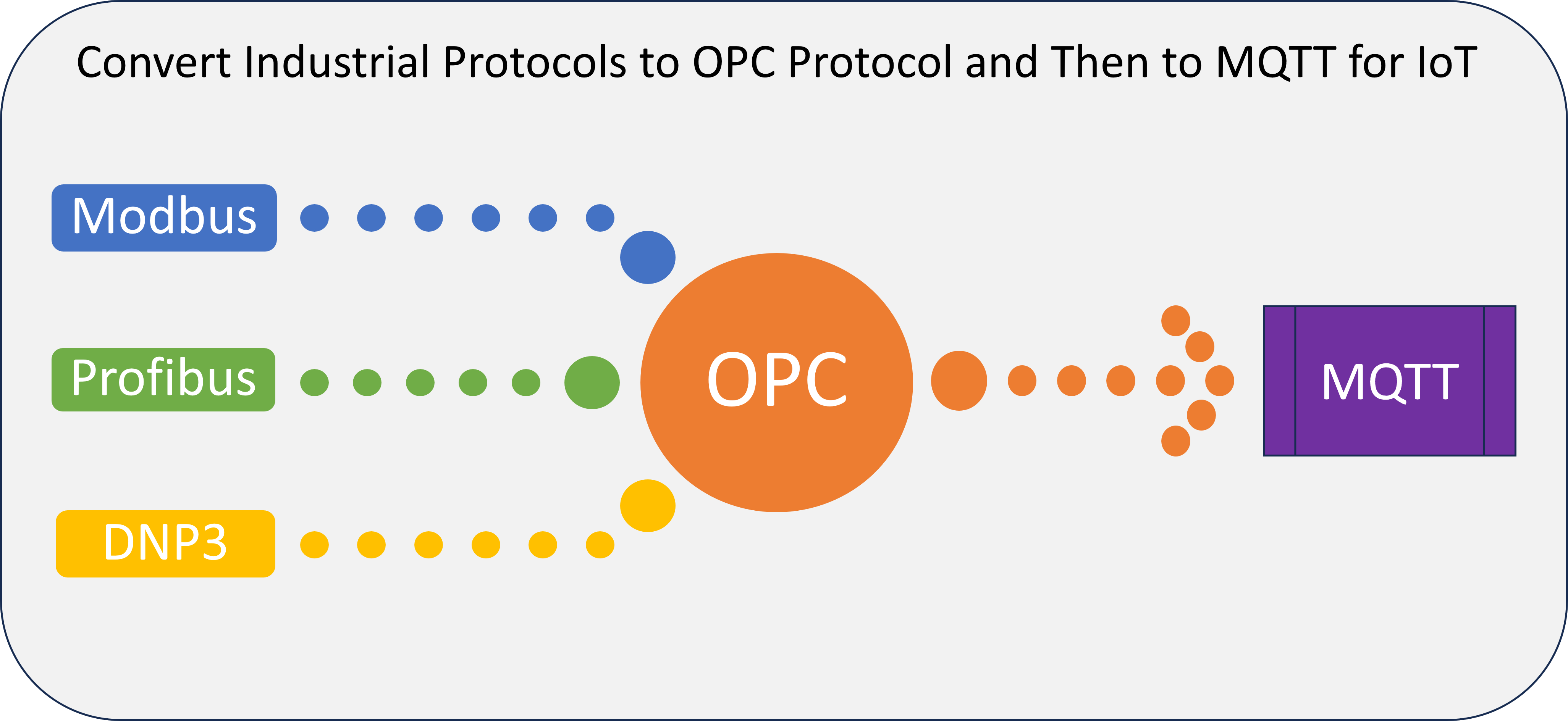

These industrial protocols, including OPC, are relatively heavy: their data packets are larger, and their synchronous nature makes it difficult to maintain a reliable connection over the internet or to send data to a remote server in another geography. Constraints like these motivated the lightweight MQTT protocol, whose first version was authored in 1999 by Andy Stanford-Clark (IBM) and Arlen Nipper (then working for Eurotech, Inc.).
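To give a feel for how small an MQTT message is on the wire, the sketch below hand-encodes a PUBLISH packet at QoS 0 following the MQTT 3.1.1 packet layout. The topic and payload are made-up examples, and in practice a client library would do this encoding for you.

```python
def encode_remaining_length(length):
    """MQTT variable-length integer: 7 bits per byte, high bit means 'more follows'."""
    encoded = bytearray()
    while True:
        byte = length % 128
        length //= 128
        if length > 0:
            byte |= 0x80
        encoded.append(byte)
        if length == 0:
            return bytes(encoded)


def build_publish_qos0(topic, payload):
    """Encode an MQTT 3.1.1 PUBLISH packet with QoS 0, no retain, no duplicate flag."""
    topic_bytes = topic.encode("utf-8")
    # Variable header: 2-byte topic length + topic (QoS 0 carries no packet id)
    body = len(topic_bytes).to_bytes(2, "big") + topic_bytes + payload
    # Fixed header: packet type 3 (PUBLISH) in the high nibble, flags 0
    return bytes([0x30]) + encode_remaining_length(len(body)) + body


# Hypothetical topic and reading
packet = build_publish_qos0("plant/temp", b"72.5")
overhead = len(packet) - len("plant/temp") - len(b"72.5")
print(len(packet), overhead)
```

For this small message the protocol adds only 4 bytes on top of the topic name and the payload.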

Because of MQTT’s lightweight nature and publish-subscribe (pub-sub) model, it has been widely adopted for IoT (Internet of Things) since the advent of cloud computing.
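The pub-sub model can be illustrated with a toy in-memory broker. This is a simplified sketch, not a real MQTT broker: there is no networking, no QoS and no session handling, the class and topic names are invented for the example, and the matcher skips the special rules for topics beginning with `$`. It only shows how publishers and subscribers are decoupled through topics and the `+` (single-level) and `#` (multi-level) wildcards.

```python
def topic_matches(topic_filter, topic):
    """Check an MQTT topic name against a filter containing '+' or '#' wildcards."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            return True  # multi-level wildcard matches everything from here on
        if i >= len(topic_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != topic_levels[i]:
            return False  # literal level differs
    return len(filter_levels) == len(topic_levels)


class InMemoryBroker:
    """Delivers each published message to every subscriber whose filter matches."""

    def __init__(self):
        self._subscriptions = []  # list of (topic_filter, callback) pairs

    def subscribe(self, topic_filter, callback):
        self._subscriptions.append((topic_filter, callback))

    def publish(self, topic, payload):
        for topic_filter, callback in self._subscriptions:
            if topic_matches(topic_filter, topic):
                callback(topic, payload)


received = []
broker = InMemoryBroker()
broker.subscribe("plant1/+/temperature", lambda t, p: received.append(("temps", t, p)))
broker.subscribe("plant1/#", lambda t, p: received.append(("all", t, p)))

# The publisher knows nothing about who is listening
broker.publish("plant1/boiler/temperature", "72.5")
broker.publish("plant1/pump/speed", "1450")
print(received)
```

The temperature subscriber sees only the first message, while the `plant1/#` subscriber sees both.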

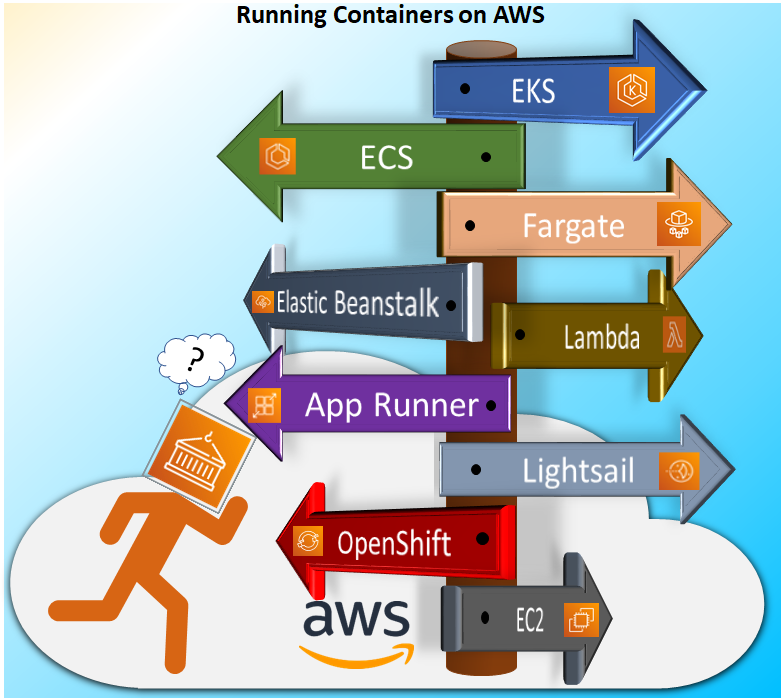

As a second step to ingest data into the cloud, we must convert OPC UA to MQTT. The AWS IoT SiteWise OPC UA collector does this for us: it acts as a client to the OPC server, subscribes to or polls data from it, and then converts that data to MQTT. The collector is a component of AWS IoT Greengrass, an edge runtime that helps build, deploy and manage IoT applications on devices.
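Conceptually, this conversion maps one OPC UA reading (node, value, quality, timestamp) to one MQTT message (topic plus payload). The sketch below shows that mapping under stated assumptions: the topic layout and the JSON field names are invented for illustration and are not the format the SiteWise collector actually uses.

```python
import json
from datetime import datetime, timezone


def opc_reading_to_mqtt(site, node_id, value, quality, source_time):
    """Map one OPC UA reading to an MQTT (topic, payload) pair."""
    # Hypothetical topic layout: <site>/opcua/<node id>, with characters that
    # have a special meaning in MQTT topics replaced by underscores
    safe_node = node_id.replace("/", "_").replace("#", "_").replace("+", "_")
    topic = f"{site}/opcua/{safe_node}"
    # Hypothetical payload schema, serialised as JSON
    payload = json.dumps({
        "nodeId": node_id,
        "value": value,
        "quality": quality,
        "timestamp": source_time.astimezone(timezone.utc).isoformat(),
    })
    return topic, payload


topic, payload = opc_reading_to_mqtt(
    site="plant1",
    node_id="ns=2;s=Boiler1.Temperature",  # example string-style OPC UA node id
    value=72.5,
    quality="good",
    source_time=datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc),
)
print(topic)
print(payload)
```

Carrying the source timestamp in the payload matters because the message may reach the cloud noticeably later than the moment the value was measured.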

Important Points to Note:

- Data transitions from synchronous industrial protocols to an asynchronous IoT protocol, so real-time data may arrive in the cloud with some latency.

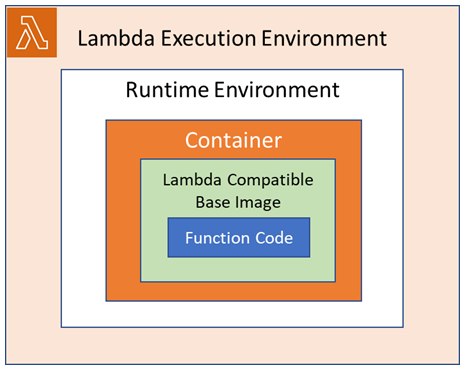

- Key decisions to control the machine should be made at the factory level. This can be achieved by running Lambda functions on IoT Greengrass.

- Important data processing for tasks such as predictive and preventive maintenance can be done with ML inference components in IoT Greengrass.

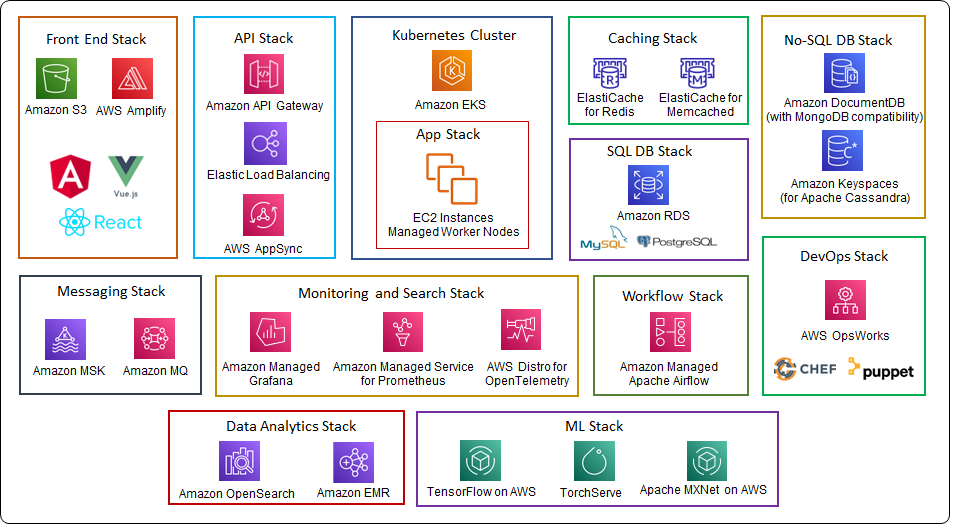

- After data is ingested into the cloud, tasks like analytics, visualization and integration with other services, such as Generative AI apps and SAP systems, can be done in the cloud.
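The split between deciding at the factory level and analysing in the cloud can be sketched as a plain function of the kind an edge component might wrap. Everything here is a hypothetical illustration: the function name, the temperature limit and the message fields are assumptions, and no Greengrass or Lambda API is used.

```python
def handle_reading_at_edge(temperature_c, trip_limit_c=90.0):
    """Decide locally, then return (local_action, message_for_cloud)."""
    if temperature_c >= trip_limit_c:
        local_action = "stop_machine"  # acted on immediately, on site
    else:
        local_action = "none"
    # The cloud only receives a record of what happened, for later analytics
    message_for_cloud = {"temperature_c": temperature_c, "action": local_action}
    return local_action, message_for_cloud


action, message = handle_reading_at_edge(95.2)
print(action, message)
```

The control decision never waits on a round trip to the cloud, so latency on the ingestion path does not affect machine safety.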