Best Practices in Implementing Security Groups for Web Application on AWS

In-Short

CaveatWisdom

Caveat: It's easy to use a large, VPC-wide CIDR range (e.g., 10.0.0.0/16) as the source in Security Groups for private instances and so avoid painful debugging of data flow. However, doing so opens our systems to a plethora of security vulnerabilities. For example, a compromised system in the network can reach, and potentially affect, every other system in the network.

Wisdom:

- Create and maintain separate private subnets for each tier of the application.

- Allow only the traffic that each instance requires. You can do this easily by assigning the “Previous Tier’s Security Group” as the source (from where the traffic is allowed) in the inbound rule of the “Present Tier’s Security Group”.

- Keep web servers private and always front them with a managed, external (internet-facing) Elastic Load Balancer.

- Access the servers through Session Manager, a capability of AWS Systems Manager.

In-Detail

Some Basics

A Security Group is an instance-level firewall in which we can define allow rules for inbound and outbound traffic. More precisely, security groups are associated with the Elastic Network Interfaces (ENIs) of the EC2 instances, through which the data flows.

We can attach multiple security groups to an instance; the rules from all security groups associated with the instance are aggregated and applied together.
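The aggregation can be pictured with a small model. This is only a sketch of the idea, not AWS code: the `Rule` class, the helper functions, and the group names `sg-alb` and `sg-monitoring` are all hypothetical, and sources are compared as plain labels.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One allow rule: protocol, inclusive port range, and a source label."""
    protocol: str
    from_port: int
    to_port: int
    source: str  # a CIDR or a security group ID, treated as an opaque label


def effective_rules(*groups: set[Rule]) -> set[Rule]:
    """Union of the allow rules from every security group attached to an instance."""
    merged: set[Rule] = set()
    for rules in groups:
        merged |= rules
    return merged


def is_allowed(rules: set[Rule], protocol: str, port: int, source: str) -> bool:
    """Traffic passes if any single rule matches; security groups have no deny rules."""
    return any(
        r.protocol == protocol and r.from_port <= port <= r.to_port and r.source == source
        for r in rules
    )


# Two hypothetical groups attached to the same instance.
web_sg = {Rule("tcp", 80, 80, "sg-alb")}
monitoring_sg = {Rule("tcp", 9100, 9100, "sg-monitoring")}

combined = effective_rules(web_sg, monitoring_sg)
print(is_allowed(combined, "tcp", 80, "sg-alb"))           # True
print(is_allowed(combined, "tcp", 9100, "sg-monitoring"))  # True
print(is_allowed(combined, "tcp", 22, "sg-alb"))           # False
```

Because rules can only allow, attaching one more group can widen access but never narrow it.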

Connection Tracking

Security Groups are stateful: when a request is allowed by an inbound rule, the corresponding response is allowed automatically, and there is no need to add an outbound rule for it explicitly. This is achieved by tracking the connection state of the traffic to and from the instance.

Note that connections can be throttled if traffic grows beyond the maximum number of tracked connections. If a rule allows all traffic on all ports (0.0.0.0/0 or ::/0) in both the inbound and outbound directions, that traffic is not tracked.
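To make the stateful behaviour concrete, here is a toy model of connection tracking. It is a simplified illustration and not how AWS implements it internally; the `StatefulFirewall` class and the sample addresses are assumptions made for the example.

```python
from __future__ import annotations


class StatefulFirewall:
    """Toy stateful filter: inbound allow rules only, responses matched by tracked state."""

    def __init__(self, inbound_ports: set[int]) -> None:
        self.inbound_ports = inbound_ports
        # Each tracked connection is (client_ip, client_port, server_port).
        self.tracked: set[tuple[str, int, int]] = set()

    def inbound(self, client_ip: str, client_port: int, server_port: int) -> bool:
        """Admit a request if an inbound rule matches, and remember the connection."""
        if server_port in self.inbound_ports:
            self.tracked.add((client_ip, client_port, server_port))
            return True
        return False

    def outbound(self, client_ip: str, client_port: int, server_port: int) -> bool:
        """Let a response out only if it belongs to a tracked connection."""
        return (client_ip, client_port, server_port) in self.tracked


fw = StatefulFirewall(inbound_ports={80})

print(fw.inbound("203.0.113.10", 50000, 80))   # True: request matches the inbound rule
print(fw.outbound("203.0.113.10", 50000, 80))  # True: response to a tracked connection
print(fw.outbound("203.0.113.10", 50001, 80))  # False: no matching tracked connection
print(fw.inbound("203.0.113.10", 50002, 22))   # False: port 22 is not allowed inbound
```

The model has no outbound rules at all, yet the response still gets through, which is the point of statefulness.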

Scenario

Let’s take a three-tier web application: the front end or API that receives traffic from users is the Web tier, the application logic API sits in the App tier, and the database is in the third tier.

Directly exposing the web servers to the open internet is a big vulnerability. It is always better to keep them in a private subnet and front them with a Load Balancer in a public subnet.

It is better to maintain separate private subnets for each tier, each with its own Auto Scaling group.

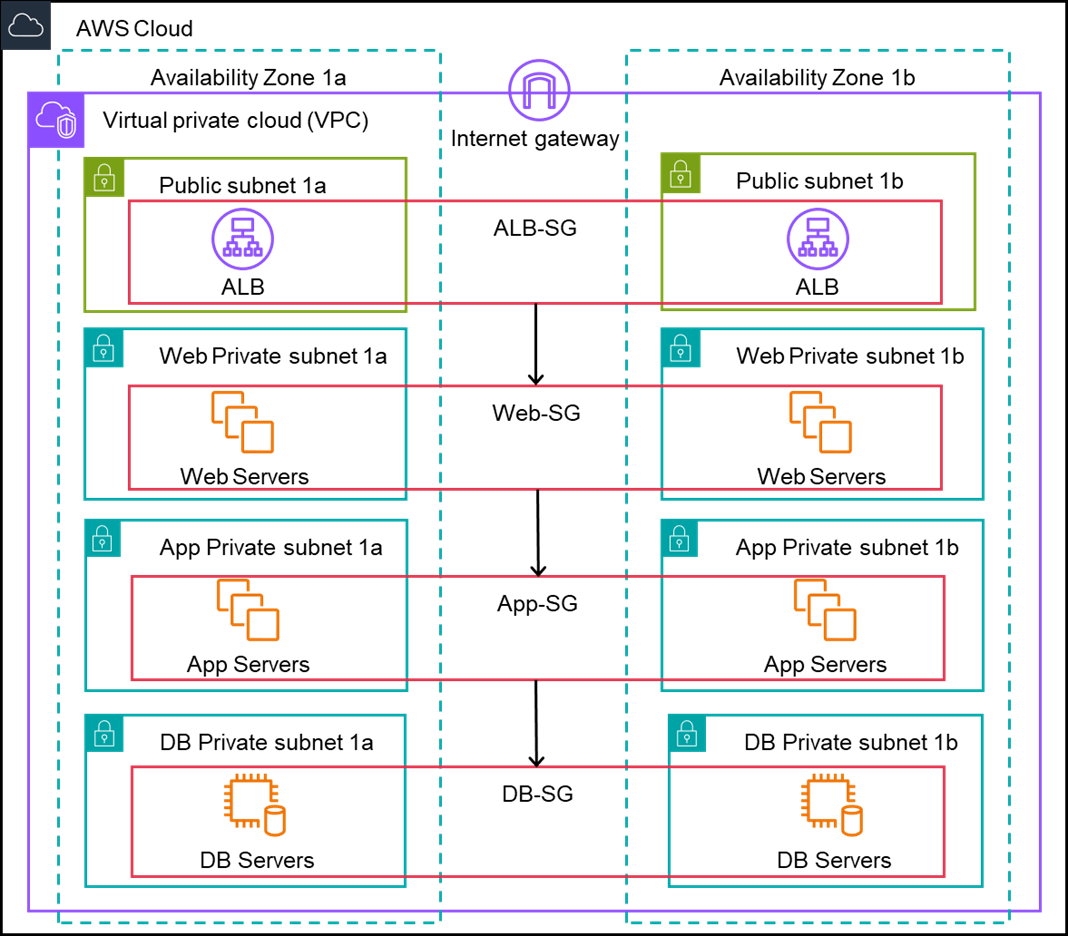

Overall, we can have one public subnet and three private subnets in each availability zone where we host the application. It is recommended to use at least two availability zones for high availability.
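As an illustration of this layout, the sketch below carves the example 10.0.0.0/16 range into one public and three private subnets per availability zone. The /24 subnet size, the zone labels `az-1` and `az-2`, and the tier names are assumptions chosen for the example; a real design should size subnets to its own needs.

```python
import ipaddress

vpc = ipaddress.ip_network("10.0.0.0/16")
zones = ["az-1", "az-2"]                 # placeholder names for two availability zones
tiers = ["public", "web", "app", "db"]   # one public subnet plus three private tiers

# Hand out consecutive, non-overlapping /24 blocks from the VPC range.
blocks = vpc.subnets(new_prefix=24)
plan = {(zone, tier): next(blocks) for zone in zones for tier in tiers}

for (zone, tier), cidr in plan.items():
    print(f"{zone:5} {tier:7} {cidr}")
# First line printed: az-1  public  10.0.0.0/24
# Last line printed:  az-2  db      10.0.7.0/24
```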

The architecture for our three-tier web application can be as below.

Architecture for 3-tier Web Application

Chaining Security Groups

In the above architecture, Security Groups are chained from one tier to the next. We need to create a separate security group for each tier and one for the load balancer in the public subnet. For an Application Load Balancer, we need to select at least two subnets, each in a different availability zone.

Implementing Chaining of Security Groups

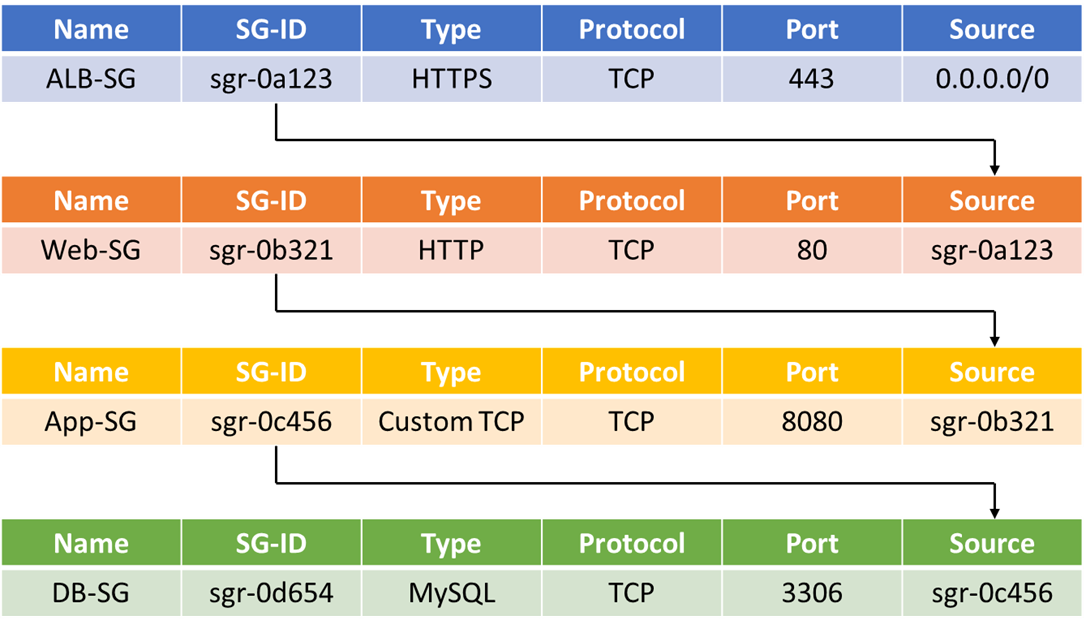

- A Security Group ALB-SG for the external Application Load Balancer should be created with an inbound rule that allows HTTPS traffic on port 443 from the internet (source 0.0.0.0/0). TLS/SSL can be terminated at the ALB, which takes on the heavy lifting of encryption and decryption. An ID for ALB-SG is created automatically; let’s say it is sg-0a123.

- For the Web tier, a Security Group Web-SG should be created with an inbound rule on HTTP port 80 whose source is ALB-SG (sg-0a123). With this rule, only connections from the ALB are allowed to reach the web servers. Let the ID created for Web-SG be sg-0b321.

- For the App tier, a Security Group App-SG should be created with an inbound rule on custom port 8080 whose source is Web-SG (sg-0b321). With this rule, only connections from instances in the Web-SG security group are allowed to reach the App servers. Let the ID created for App-SG be sg-0c456.

- For the Database tier, a Security Group DB-SG should be created with an inbound rule on MySQL/Aurora port 3306 whose source is App-SG (sg-0c456). With this rule, only connections from instances in the App-SG security group are allowed to reach the Database servers. Let the ID created for DB-SG be sg-0d654.
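The chain described above can be written down as data. The sketch below builds the inbound rules as plain dictionaries in the `IpPermissions` shape accepted by boto3's `authorize_security_group_ingress`. The helper functions are hypothetical, and the group IDs are shortened placeholders in the `sg-` format that AWS uses for security group IDs. Nothing here calls AWS.

```python
def cidr_rule(port: int, cidr: str) -> dict:
    """Inbound TCP rule on one port whose source is a CIDR range."""
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [{"CidrIp": cidr}],
    }


def chained_rule(port: int, source_group_id: str) -> dict:
    """Inbound TCP rule on one port whose source is another security group."""
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "UserIdGroupPairs": [{"GroupId": source_group_id}],
    }


# Placeholder IDs; real security group IDs are generated by AWS.
ALB_SG, WEB_SG, APP_SG, DB_SG = "sg-0a123", "sg-0b321", "sg-0c456", "sg-0d654"

ingress = {
    ALB_SG: [cidr_rule(443, "0.0.0.0/0")],   # internet -> ALB on HTTPS
    WEB_SG: [chained_rule(80, ALB_SG)],      # ALB -> web tier on HTTP
    APP_SG: [chained_rule(8080, WEB_SG)],    # web tier -> app tier
    DB_SG: [chained_rule(3306, APP_SG)],     # app tier -> database
}

for group_id, rules in ingress.items():
    print(group_id, rules)

# With boto3 installed and credentials configured, each entry could be applied as:
#   ec2 = boto3.client("ec2")
#   ec2.authorize_security_group_ingress(GroupId=group_id, IpPermissions=rules)
```

Only the load balancer's rule names a CIDR range; every private tier names the previous tier's security group, so an instance outside that group cannot reach the tier even if it sits in the same VPC.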