Basics of AWS Cloud Formation Template

Learn the Anatomy of AWS CloudFormation Template

Learn about basics of networking in AWS Cloud with Amazon VPC

In-Short

CaveatWisdom

Caveat: Developing solutions with open-source technologies frees us from licensing and lets us run them anywhere we want; however, managing and scaling an open-source stack ourselves becomes increasingly complex and difficult at high velocity.

Wisdom:

We can easily offload management and scalability to managed services in the cloud and concentrate on the business functionality we need. This can also reduce the total cost of ownership (TCO) in the long term.

In-Detail

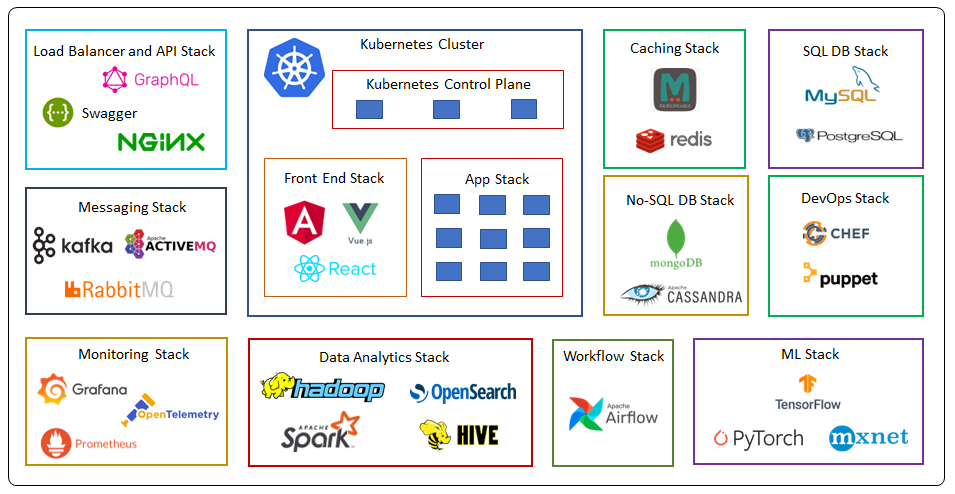

Let’s consider an on-premises solution stack that we would like to migrate to the cloud to gain scalability and high availability.

Application Stack

A legacy application stack hosted on VMs in the on-premises data centre can be moved to Amazon EC2 instances with lift-and-shift operations.

Kubernetes Cluster

Nowadays many organizations use Kubernetes to orchestrate their containerised microservices. Installing and managing the Kubernetes control plane nodes and the etcd database cluster with high availability and scalability is a challenging task. On top of that, we also need to manage the worker nodes on the data plane where we run our applications.

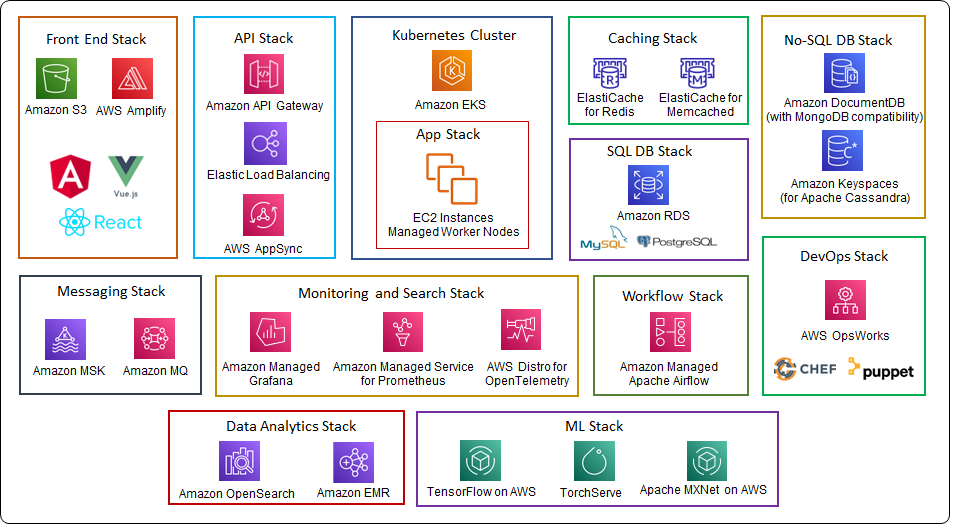

We can easily migrate our Kubernetes workloads to Amazon Elastic Kubernetes Service (EKS), which fully manages the control plane and its etcd cluster with high availability and reliability. Amazon EKS gives us three options for worker nodes: managed worker nodes, unmanaged worker nodes, and Fargate, a fully managed serverless option for running containers with Amazon EKS. Amazon EKS is certified against the CNCF Kubernetes software conformance standards, so we need not worry about portability issues. You can refer to my previous blog post on Planning and Managing Amazon VPC IP space in Amazon EKS cluster for best practices.

If you wish to keep some workloads on-premises, Amazon EKS Anywhere can run Kubernetes clusters there as well.

Front-End Stack

If our front-end stack is built with Angular, React, Vue, or any other static website technology, we can take advantage of the web hosting capabilities of Amazon S3, a fully managed object storage service.

With AWS Amplify we can host both static and dynamic full stack web apps and also implement CI/CD pipelines for any new web or mobile projects.

Load balancer and API stack

We can use Elastic Load Balancing to handle the traffic. An Application Load Balancer can do content-based routing to the microservices on the Amazon EKS cluster; this can be easily implemented with the ingress controller provided by AWS for Kubernetes.

If we have a REST API stack developed with the OpenAPI Specification (Swagger), we can easily import the Swagger files into Amazon API Gateway and implement the API stack.

If we have a GraphQL API stack, we can implement it with the managed serverless service AWS AppSync, which supports back-end data stores on AWS such as DynamoDB.

If we have Nginx load balancers on-premises, we can move them to Amazon Lightsail instances.

Messaging and Streaming Stack

It is important to decouple applications with a messaging layer. In a microservices architecture, the messaging layer is generally implemented with popular open-source technologies such as Apache Kafka, Apache ActiveMQ, and RabbitMQ. We can shift these workloads to the managed services Amazon Managed Streaming for Apache Kafka (Amazon MSK) and Amazon MQ.

Amazon MSK is a fully managed service for Apache Kafka to process streaming data. It manages the control-plane operations for creating, updating, and deleting clusters, and we use the data plane for producing and consuming the streaming data. Amazon MSK also creates the Apache ZooKeeper nodes; ZooKeeper is an open-source server that makes distributed coordination easy. MSK Serverless is also available in some regions, and with it you need not worry about cluster capacity.

Amazon MQ is a managed message broker service that supports both Apache ActiveMQ and RabbitMQ. You can migrate existing message brokers that rely on compatibility with APIs such as JMS or protocols such as AMQP 0-9-1, AMQP 1.0, MQTT, OpenWire, and STOMP.

Monitoring Stack

Well-known tools for monitoring IT resources and applications are OpenTelemetry, Prometheus, and Grafana. In many cases OpenTelemetry agents collect metrics and distributed traces from containers and applications and send them to the Prometheus server, from which they can be visualized and analysed with Grafana.

AWS Distro for OpenTelemetry consists of SDKs, auto-instrumentation agents, collectors, and exporters that send data to back-end services. It follows an upstream-first model, which means AWS commits enhancements to the CNCF (Cloud Native Computing Foundation) project and then builds the Distro from upstream. AWS Distro for OpenTelemetry supports Java, .NET, JavaScript, Go, and Python. You can download AWS Distro for OpenTelemetry and run it as a daemon in the Kubernetes cluster. It also supports sending metrics to Amazon CloudWatch.

Amazon Managed Service for Prometheus is a serverless, Prometheus-compatible monitoring service for container metrics, which means you can use the same open-source Prometheus data model and query language that you use on-premises to monitor your containers on Kubernetes. You can integrate it with AWS security services to secure your data. As it is a managed service, high availability and scalability are built in. Amazon Managed Service for Prometheus is charged based on metrics ingested, metrics stored per GB per month, and query samples processed, so you pay only for what you use.

With Amazon Managed Grafana you can query and visualize metrics, logs and traces from multiple sources including Prometheus. It’s integrated with data sources like Amazon CloudWatch, Amazon OpenSearch Service, AWS X-Ray, AWS IoT SiteWise, Amazon Timestream so that you can have a central place for all your metrics and logs. You can control who can have access to Grafana with IAM Identity Center. You can also upgrade to Grafana Enterprise which gives you direct support from Grafana Labs.

SQL DB Stack

Most organizations have a SQL DB stack, and many prefer open-source databases such as MySQL and PostgreSQL. We can easily move these database workloads to Amazon Relational Database Service (Amazon RDS), which supports six database engines: MySQL, PostgreSQL, MariaDB, Microsoft SQL Server, Oracle, and Aurora. Amazon RDS manages common database administration tasks such as backups and maintenance. It offers high availability through Multi-AZ, which is just a switch: once enabled, Amazon RDS automatically manages replication and failover to a standby database instance in another availability zone. With Amazon RDS we can also create read replicas for read-heavy workloads. If Aurora supports our MySQL or PostgreSQL version, it makes sense to shift those workloads to Amazon Aurora with little or no change, because according to AWS Aurora gives up to 5x the throughput of standard MySQL and up to 3x the throughput of standard PostgreSQL by taking advantage of clustered volume storage in the cloud. Aurora storage can scale up to 128 TB.

If you have SQL Server workloads and opt to go for Aurora PostgreSQL, then Babelfish can help by accepting connections from your SQL Server clients.

We can easily migrate our database workloads with AWS Database Migration Service and the AWS Schema Conversion Tool.

No-SQL DB Stack

If we have a NoSQL DB stack such as MongoDB or Cassandra in our on-premises data center, then we can choose to run our workloads on Amazon DocumentDB (with MongoDB compatibility) and Amazon Keyspaces (for Apache Cassandra). Amazon DocumentDB is a fully managed database service whose storage volume grows as the database grows. The storage volume grows in increments of 10 GB, up to a maximum of 64 TB. We can also create up to 15 read replicas in other availability zones for high read throughput. You may also consider the MongoDB Atlas on AWS service from AWS Marketplace, which is developed and supported by MongoDB.

You can use the same Cassandra application code and developer tools on Amazon Keyspaces (for Apache Cassandra), which gives high availability and scalability. Amazon Keyspaces is serverless, so you pay only for the resources that you use, and the service automatically scales tables up and down in response to application traffic.

Caching Stack

We use an in-memory data store as a cache for applications that need very low latency. Amazon ElastiCache supports the Memcached and Redis cache engines. We can go for Memcached if we need a simple model and multithreaded performance. We can choose Redis if we require high availability with replication in another availability zone and other advanced features such as pub/sub and complex data types. With Redis we can choose between cluster mode enabled and cluster mode disabled. ElastiCache for Redis manages backups, software patching, automatic failure detection, and recovery.

DevOps Stack

For DevOps and configuration management we may be using Chef Automate or Puppet Enterprise. When we shift to the cloud, we can use the same stack to configure and deploy our applications with AWS OpsWorks, a configuration management service that creates fully managed Puppet Enterprise servers and Chef Automate servers.

With OpsWorks for Puppet Enterprise we can configure, deploy, and manage EC2 instances as well as on-premises servers. It gives full-stack automation for OS configuration, package installation and updates, and change management.

You can run your cookbooks, which contain recipes, on OpsWorks for Chef Automate to manage infrastructure and applications on EC2 instances.

Data Analytics Stack

OpenSearch is an open-source search and analytics suite forked from the ALv2-licensed versions of Elasticsearch and Kibana (the last open-source versions of Elasticsearch and Kibana). If you have workloads developed on the open-source version of Elasticsearch or on OpenSearch, you can easily migrate them to Amazon OpenSearch Service, which is a managed service. AWS has added several new features for OpenSearch such as support for cross-cluster replication, trace analytics, data streams, transforms, a new observability user interface, and notebooks in OpenSearch Dashboards.

Big data frameworks like Apache Hadoop and Apache Spark can be ported to Amazon EMR. We can process petabyte-scale data from multiple data stores such as Amazon S3 and Amazon DynamoDB using open-source frameworks such as Apache Spark, Apache Hive, and Presto. We can create an Amazon EMR cluster, which is a collection of managed EC2 instances on which EMR installs different software components; each EC2 instance becomes a node in the Apache Hadoop framework. Amazon EMR Serverless is a new option that makes it easy and cost-effective for data engineers and analysts to run applications without managing clusters.

Workflow Stack

For workflow orchestration, we can port Apache Airflow to Amazon MWAA, which is a managed service for Apache Airflow. As usual in the cloud, we gain scalability and high availability without the headache of maintenance.

ML Stack

Apart from the AWS-native ML tools in Amazon SageMaker, AWS supports many open-source tools such as MXNet, TensorFlow, and PyTorch with managed services and Deep Learning AMIs.

Open-Source Technology Stack on AWS Cloud

There are many great tools for building CI/CD pipelines on AWS Cloud. For the sake of simplicity, I am limiting my discussion to AWS-native tools.

In-Short

CaveatWisdom

Caveat: Achieving speed, scale, and agility is important for any business; however, it should not come at the expense of security.

Wisdom: Security should be implemented by design in a CI/CD pipeline and not as an afterthought.

Security Of the CI/CD Pipeline: It is about defining who can access the pipeline and what they can do. It is also about hardening the build servers and deployment conditions.

Security In the CI/CD Pipeline: It is about static code analysis and validating the artifacts generated in the pipeline.

In-Detail

The challenge when automating the whole Continuous Integration and Continuous Delivery / Deployment (CI/CD) process is implementing the security at scale. This can be achieved in DevSecOps by implementing Security by Design in the pipeline.

Security added as an afterthought

Security by Design

Security by Design will give confidence to deliver at high speed and improve the security posture of the organization. So, we should think of the security from every aspect and at every stage while designing the CI/CD pipeline and implement it while building the Pipeline.

Some Basics

Continuous Integration (CI): The process that starts with committing code to the source control repository and includes building and testing the artifacts.

Continuous Delivery / Deployment (CD): The process that extends CI through to deployment. The difference between Delivery and Deployment is that Delivery includes a manual intervention phase (approval) before deploying to production, whereas Deployment is fully automated, with thorough automated testing and rollback in case of a deployment failure.

DevSecOps AWS Toolset for Infrastructure and Application Pipelines

By following the Security Perspective of AWS Cloud Adoption Framework (CAF) we can build the capabilities in implementing Security of the Pipeline and Security in the Pipeline.

Implementing Security Of the CI/CD Pipeline

While designing the security of the pipeline, we need to look at the whole pipeline as a resource, apart from the security of the individual elements in it. We need to consider the following factors from a security perspective.

Security Governance

Once we establish which access controls the pipeline needs, we can develop the security governance capability of the pipeline by implementing directive and preventive controls with AWS IAM and AWS Organizations policies.

We need to follow the principle of least privilege and grant only the necessary access. For example, read-only access to the pipeline can be given with the following policy:

{
  "Statement": [
    {
      "Action": [
        "codepipeline:GetPipeline",
        "codepipeline:GetPipelineState",
        "codepipeline:GetPipelineExecution",
        "codepipeline:ListPipelineExecutions",
        "codepipeline:ListActionExecutions",
        "codepipeline:ListActionTypes",
        "codepipeline:ListPipelines",
        "codepipeline:ListTagsForResource",
        "iam:ListRoles",
        "s3:ListAllMyBuckets",
        "codecommit:ListRepositories",
        "codedeploy:ListApplications",
        "lambda:ListFunctions",
        "codestar-notifications:ListNotificationRules",
        "codestar-notifications:ListEventTypes",
        "codestar-notifications:ListTargets"
      ],
      "Effect": "Allow",
      "Resource": "arn:aws:codepipeline:us-west-2:123456789111:ExamplePipeline"
    },
    {
      "Action": [
        "codepipeline:GetPipeline",
        "codepipeline:GetPipelineState",
        "codepipeline:GetPipelineExecution",
        "codepipeline:ListPipelineExecutions",
        "codepipeline:ListActionExecutions",
        "codepipeline:ListActionTypes",
        "codepipeline:ListPipelines",
        "codepipeline:ListTagsForResource",
        "iam:ListRoles",
        "s3:GetBucketPolicy",
        "s3:GetObject",
        "s3:ListBucket",
        "codecommit:ListBranches",
        "codedeploy:GetApplication",
        "codedeploy:GetDeploymentGroup",
        "codedeploy:ListDeploymentGroups",
        "elasticbeanstalk:DescribeApplications",
        "elasticbeanstalk:DescribeEnvironments",
        "lambda:GetFunctionConfiguration",
        "opsworks:DescribeApps",
        "opsworks:DescribeLayers",
        "opsworks:DescribeStacks"
      ],
      "Effect": "Allow",
      "Resource": "*"
    },
    {
      "Sid": "CodeStarNotificationsReadOnlyAccess",
      "Effect": "Allow",
      "Action": [
        "codestar-notifications:DescribeNotificationRule"
      ],
      "Resource": "*",
      "Condition": {
        "StringLike": {
          "codestar-notifications:NotificationsForResource": "arn:aws:codepipeline:*"
        }
      }
    }
  ],
  "Version": "2012-10-17"
}

Many such policies can be found in the AWS documentation.
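To make the least-privilege idea concrete, here is a minimal Python sketch that flags any allowed action in a policy document whose operation name does not start with Get, List, or Describe. The prefix list and the function name `non_read_only_actions` are my own assumptions for illustration; this is a rough lint, not an AWS tool, and it is no substitute for reviewing the policy against the official service documentation.

```python
import json
from typing import List

# Assumption: operations with these prefixes are treated as read-only.
READ_ONLY_PREFIXES = ("Get", "List", "Describe")


def non_read_only_actions(policy: dict) -> List[str]:
    """Return allowed actions whose operation name does not look read-only."""
    flagged = []
    for statement in policy.get("Statement", []):
        if statement.get("Effect") != "Allow":
            continue
        actions = statement.get("Action", [])
        if isinstance(actions, str):  # IAM accepts a single string or a list
            actions = [actions]
        for action in actions:
            # "service:Operation" -> "Operation"; wildcards such as "s3:*" are flagged
            operation = action.split(":", 1)[-1]
            if not operation.startswith(READ_ONLY_PREFIXES):
                flagged.append(action)
    return flagged


# Hypothetical policy that accidentally grants one mutating action.
sample_policy = json.loads("""
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "codepipeline:GetPipeline",
        "codepipeline:ListPipelines",
        "codepipeline:StartPipelineExecution"
      ],
      "Resource": "*"
    }
  ]
}
""")

print(non_read_only_actions(sample_policy))
```

Running such a check in a pre-merge step is one way to notice when a policy meant to be read-only quietly gains a write permission.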

Usually, the Production, Development, and Testing environments are each isolated in a separate AWS account under AWS Organizations for maximum security, and security controls are established with service control policies (SCPs).

Threat Detection and Configuration Changes

It is a challenging task to ensure that everyone in the organization follows best practices when creating CI/CD pipelines. We can automate the detection of pipeline configuration changes with AWS Config and take remediation actions by integrating Lambda functions.
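As a rough illustration of the kind of check a Lambda-backed custom AWS Config rule could run, the sketch below classifies a pipeline definition as COMPLIANT or NON_COMPLIANT. The two rules (a KMS-encrypted artifact store and at least one Test action) are hypothetical examples, and the dictionary shape is assumed to follow the pipeline structure that CodePipeline returns; the Lambda wiring and the call that reports the result back to AWS Config are deliberately left out.

```python
def evaluate_pipeline(pipeline: dict) -> str:
    """Classify a pipeline definition against two hypothetical rules."""
    # Hypothetical rule 1: the artifact store must name a KMS encryption key.
    encryption_key = pipeline.get("artifactStore", {}).get("encryptionKey", {})
    if encryption_key.get("type") != "KMS":
        return "NON_COMPLIANT"

    # Hypothetical rule 2: at least one action must belong to the Test category.
    categories = {
        action.get("actionTypeId", {}).get("category")
        for stage in pipeline.get("stages", [])
        for action in stage.get("actions", [])
    }
    if "Test" not in categories:
        return "NON_COMPLIANT"

    return "COMPLIANT"


# Made-up pipeline definition used only to exercise the function.
example_pipeline = {
    "name": "ExamplePipeline",
    "artifactStore": {
        "type": "S3",
        "location": "example-artifact-bucket",
        "encryptionKey": {"id": "alias/example-key", "type": "KMS"},
    },
    "stages": [
        {"name": "Source", "actions": [{"actionTypeId": {"category": "Source"}}]},
        {"name": "Build", "actions": [{"actionTypeId": {"category": "Build"}}]},
    ],
}

print(evaluate_pipeline(example_pipeline))  # no Test action, so NON_COMPLIANT
```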

It is important to audit pipeline access with AWS CloudTrail, both to find knowledge gaps among personnel and to detect nefarious activities.

Implementing Security In the CI/CD Pipeline

Let us look at Security in the Pipeline at each stage. Although there could be many stages in the Pipeline, we will consider the main stages, which are Code, Build, Test and Deploy. If any security test fails, the Pipeline stops, and the code does not move to production.

Code Stage

AWS CodePipeline supports various source control services like GitHub, Bitbucket, S3 and CodeCommit. The advantage with CodeCommit and S3 is we can integrate them with AWS IAM security and define who can commit to the code repo and who can merge the pull requests.

We can automate the process of Static Code Analysis with Amazon CodeGuru once the code is pushed to the source control. Amazon CodeGuru uses program analysis and machine learning to detect potential defects that are difficult for developers to find and offers suggestions for improving your Java and Python code.

CodeGuru also detects hardcoded secrets in the code and recommends remediation steps to secure the secrets with AWS Secrets Manager.
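CodeGuru's detectors are far more sophisticated than this, but the idea of scanning source code for secrets can be shown with a toy sketch. It assumes only one pattern, the well-known shape of a long-term AWS access key ID (`AKIA` followed by 16 upper-case letters or digits); the function name `find_hardcoded_keys` is hypothetical and this is not how CodeGuru is implemented.

```python
import re
from typing import List, Tuple

# Long-term AWS access key IDs start with "AKIA" and are 20 characters long.
ACCESS_KEY_PATTERN = re.compile(r"\bAKIA[0-9A-Z]{16}\b")


def find_hardcoded_keys(source: str) -> List[Tuple[int, str]]:
    """Return (line number, matched key ID) pairs found in the source text."""
    findings = []
    for line_number, line in enumerate(source.splitlines(), start=1):
        for match in ACCESS_KEY_PATTERN.finditer(line):
            findings.append((line_number, match.group()))
    return findings


# The key below is the placeholder value used throughout AWS documentation.
sample_source = 'import os\naws_key = "AKIAIOSFODNN7EXAMPLE"\nregion = "us-west-2"\n'

for line_number, key in find_hardcoded_keys(sample_source):
    print(f"line {line_number}: possible hardcoded access key {key}")
```

A finding like this is the cue to move the value into AWS Secrets Manager and read it at runtime instead.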

Build Stage

Securing the build servers and detecting their vulnerabilities is important when we maintain our own build servers such as Jenkins. We can use Amazon GuardDuty for threat detection and Amazon Inspector for vulnerability scanning.

We can avoid the headache of maintaining a build server if we use the fully managed build service AWS CodeBuild.

The artifacts built at the build stage can be stored securely in ECR and S3. Amazon ECR supports automated scanning of images for vulnerabilities.

The code and build artifacts stored on S3, CodeCommit and ECR can be encrypted with AWS KMS keys.

Test Stage

AWS CodePipeline supports various services for test action including AWS CodeBuild, CodeCov, Jenkins, Lambda, DeviceFarm, etc.

You can use AWS CodeBuild for unit testing and compliance testing by integrating test scripts in it and stop the pipeline if the test fails.

Vulnerabilities in open-source dependencies can be challenging to track. By integrating WhiteSource with CodeBuild we can automate scanning of any source repository in the pipeline.

Consider testing the system end to end, including networking and communications between the microservices and other components such as databases.

Consider mocking the endpoints of third-party APIs with Lambda.

AMI Automation and Vulnerability Detection

It is recommended to build custom pre-baked AMIs, with all the necessary software installed on top of the AWS Quick Start AMIs as a base, for faster deployment of instances.

We can automate the creation of AMIs with Systems Manager and restrict the access to launch EC2 instance to only Tagged AMIs while building the pipeline for deploying infrastructure with CloudFormation.
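One way to express "launch only from tagged AMIs" is an IAM policy that allows `ec2:RunInstances` on image resources only when the image carries a given tag. The Python sketch below builds such a policy document; the tag key `Approved`, its value, and the function name are assumptions for illustration, and the list of other resources a launch needs may have to be adjusted for your environment.

```python
import json


def tagged_ami_launch_policy(tag_key: str, tag_value: str) -> dict:
    """Build an IAM policy allowing launches only from AMIs with the given tag."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "LaunchOnlyFromTaggedAmis",
                "Effect": "Allow",
                "Action": "ec2:RunInstances",
                "Resource": "arn:aws:ec2:*::image/ami-*",
                "Condition": {
                    "StringEquals": {f"ec2:ResourceTag/{tag_key}": tag_value}
                },
            },
            {
                # RunInstances also touches these resource types during a launch.
                "Sid": "AllowOtherLaunchResources",
                "Effect": "Allow",
                "Action": "ec2:RunInstances",
                "Resource": [
                    "arn:aws:ec2:*:*:instance/*",
                    "arn:aws:ec2:*:*:subnet/*",
                    "arn:aws:ec2:*:*:volume/*",
                    "arn:aws:ec2:*:*:network-interface/*",
                    "arn:aws:ec2:*:*:security-group/*",
                    "arn:aws:ec2:*:*:key-pair/*",
                ],
            },
        ],
    }


# Hypothetical tag applied by the AMI build automation.
policy = tagged_ami_launch_policy("Approved", "true")
print(json.dumps(policy, indent=2))
```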

It is important to periodically scan the EC2 instances built from our custom AMIs for vulnerabilities. Amazon Inspector, a managed service, makes it easy to find vulnerabilities in EC2 instances and ECR images. We can integrate Amazon Inspector into our pipeline with a Lambda function, and we can also automate remediation with the help of Systems Manager.

Deploy

When we have an isolated production environment in a separate Production account, consider building an AWS CodePipeline pipeline that spans multiple accounts and regions. We just need to grant the necessary permissions with IAM roles.

Even the users who control the production environment should consider accessing it through IAM roles whenever required. Roles give secure access with temporary credentials, which helps prevent accidental changes to the production environment. This technique of switching roles lets us apply the principle of least privilege: take on ‘elevated’ permissions only while doing a task that requires them, and give them up when the task is done.

Implement Multi-Factor Authentication (MFA) while deploying critical applications.
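Role switching and MFA can be combined in the role's trust policy, so that the production role can be assumed only by callers who authenticated with MFA. The sketch below builds such a trust policy in Python; the account ID is a placeholder borrowed from the example ARN earlier in this post, and the function name is hypothetical.

```python
import json


def mfa_required_trust_policy(trusted_account_id: str) -> dict:
    """Trust policy letting principals of one account assume the role only with MFA."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{trusted_account_id}:root"},
                "Action": "sts:AssumeRole",
                # The request must come from a session authenticated with MFA.
                "Condition": {"Bool": {"aws:MultiFactorAuthPresent": "true"}},
            }
        ],
    }


trust_policy = mfa_required_trust_policy("123456789111")  # placeholder account ID
print(json.dumps(trust_policy, indent=2))
```

The permissions the role grants stay in a separate permissions policy; the trust policy only decides who may take the role on and under which conditions.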

Consider doing canary deployment, that is testing with a small amount of production traffic, which can be easily managed with AWS CodeDeploy.
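The shape of a canary rollout is simple enough to sketch in a few lines: send a small share of traffic to the new version, wait, then shift the rest. The Python model below is only an illustration of that schedule (CodeDeploy ships predefined configurations in this spirit, for example `CodeDeployDefault.LambdaCanary10Percent5Minutes`); the function names are hypothetical and nothing here calls CodeDeploy.

```python
from typing import List, Tuple


def canary_schedule(canary_percent: int, wait_minutes: int) -> List[Tuple[int, int]]:
    """Return (minute, percent of traffic on the new version) steps."""
    if not 0 < canary_percent < 100:
        raise ValueError("canary_percent must be between 1 and 99")
    if wait_minutes <= 0:
        raise ValueError("wait_minutes must be positive")
    # Step 1: canary share at minute 0. Step 2: all traffic after the wait.
    return [(0, canary_percent), (wait_minutes, 100)]


def new_version_share(schedule: List[Tuple[int, int]], minute: int) -> int:
    """Percent of traffic served by the new version at a given minute."""
    share = 0
    for start_minute, percent in schedule:
        if minute >= start_minute:
            share = percent
    return share


schedule = canary_schedule(canary_percent=10, wait_minutes=5)
for minute in (0, 3, 5):
    print(f"minute {minute}: {new_version_share(schedule, minute)}% on new version")
```

If alarms fire during the waiting period, the deployment is rolled back while only the canary share of users has been affected.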

For the sake of simplicity, I will discuss only IPv4 addressing in this post. I will discuss IPv6 addressing in another blog post.

In-Short

CaveatWisdom

Caveat: Planning Amazon VPC IP space and choosing right EC2 instance type is important for Amazon EKS Cluster, or else, Kubernetes can stop creating or scaling pods for want of IP addresses in the cluster and our applications can stop scaling.

Wisdom:

In-Detail

Some Basics

Amazon VPC is a virtual private network which you create to logically isolate EC2 instances and assign private IP addresses for your EC2 instances within the VPC IP address range you defined. A public subnet is a subnet with a route table that includes a route to an internet gateway, whereas a private subnet is a subnet with a route table that doesn’t include a route to an internet gateway.

Kubernetes is an open-source container orchestration system for automating software deployment, scaling, and management. It creates Pods; each Pod can contain one container or a group of logically related containers. A Pod is like an ephemeral lightweight virtual machine with its own private IP address. This IP address is assigned by the cluster's network (CNI) plugin.

Amazon EKS is a managed service for running Kubernetes in the AWS cloud. It automatically manages the availability and scalability of the Kubernetes control plane nodes responsible for scheduling containers, managing application availability, storing cluster data, and other key tasks.

Kubernetes supports Container Network Interface (CNI) plugins for cluster networking; a suitable CNI plugin is required to implement the Kubernetes networking model. An Amazon EKS cluster uses the Amazon VPC CNI plugin, which is an open-source implementation. The VPC CNI plugin allocates IP addresses to Pods on each node from the VPC CIDR range.

In the above sample Amazon EKS Cluster Architecture, the control plane of Kubernetes is managed by Amazon EKS in its own VPC and we will not have any access to it. Only the worker nodes (Data Plane of K8s) can be in a VPC which is managed by us.

The above VPC has the CIDR range 192.168.0.0/16 with two public subnets and two private subnets (one of each per availability zone), an internet gateway attached to the VPC, an Application Load Balancer that distributes traffic to worker nodes, and VPC endpoints that route traffic to other AWS services.

The NAT gateways are in the public subnets; worker nodes in the private subnets use them to communicate with AWS services and the internet.

The Amazon EKS cluster is created with the VPC CNI add-on, which is represented at each worker node, and each worker node can have multiple Elastic Network Interfaces (ENIs): one primary and the others secondary.

The Story

Let’s say a global multinational company that has thousands of applications running on an on-premises Kubernetes installation has decided to move to AWS to take advantage of scalability and high availability and also to save cost.

For large migrations like this, we need to plan meticulously and migrate the workloads to AWS. There are many AWS migration tools that make this job easier, which I will discuss in another blog. For this post, let us concentrate on planning our Amazon EKS cluster from a networking perspective.

Let us discuss the following main things from a networking perspective.

1. Planning VPC CIDR Range

We should plan a larger CIDR block for the VPC, with a netmask of /16, which provides up to 65,536 IPs; this allows us to add new workloads for ever-expanding business requirements. If we run out of IP space in this primary range, we can add secondary CIDR blocks to the VPC. We can add up to 4 additional CIDR blocks by default and request a quota increase to up to 50, including the primary CIDR block. Even though we can have 50 CIDR blocks, we should be aware that there is a Network Address Usage (NAU) limit, a metric applied to resources in a VPC. By default the limit is 64,000 NAU units per VPC, and the quota can be increased up to 256,000. Since 256,000 / 65,536 ≈ 3.9, in practice we can fully use only about 4 CIDR blocks with a netmask of /16.

When we add secondary VPC CIDR blocks, we need to configure the VPC CNI plugin in the Amazon EKS cluster so that they can be used for scheduling Pods. Amazon VPC supports RFC 1918 and non-RFC 1918 CIDR blocks, so EKS clusters can run in Amazon VPCs addressed with CIDR blocks in the 100.64.0.0/10 and 198.19.0.0/16 ranges. In hybrid networking models, i.e., if we would like to extend our EKS cluster workloads to on-premises data centres, we can use those CIDR blocks along with CNI custom networking. We can conserve the RFC 1918 IP space in a hybrid network by leveraging RFC 6598 addresses.
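The arithmetic behind these limits is easy to check with Python's standard `ipaddress` module. The sketch below is only a planning aid: it compares the raw size of a set of CIDR blocks with the NAU quotas quoted above, on the assumption that every address in use costs one NAU unit, so the block size is an upper bound on what the blocks could consume. The secondary CIDR blocks and the function names are hypothetical.

```python
import ipaddress
from typing import List

NAU_DEFAULT_QUOTA = 64_000   # default NAU units per VPC, as quoted above
NAU_MAX_QUOTA = 256_000      # maximum after a quota increase, as quoted above


def address_count(cidrs: List[str]) -> int:
    """Total number of addresses covered by the given CIDR blocks."""
    return sum(ipaddress.ip_network(cidr).num_addresses for cidr in cidrs)


def fits_quota(cidrs: List[str], quota: int) -> bool:
    """True if every address in the blocks could be used without exceeding the quota."""
    return address_count(cidrs) <= quota


primary = ["192.168.0.0/16"]
# Hypothetical secondary blocks taken from the RFC 6598 range.
three_blocks = primary + ["100.64.0.0/16", "100.65.0.0/16"]
four_blocks = three_blocks + ["100.66.0.0/16"]

print(address_count(primary))                    # size of one /16 block
print(fits_quota(primary, NAU_DEFAULT_QUOTA))    # a full /16 exceeds the default quota
print(fits_quota(three_blocks, NAU_MAX_QUOTA))
print(fits_quota(four_blocks, NAU_MAX_QUOTA))    # four full /16 blocks slightly exceed it
print(round(NAU_MAX_QUOTA / 65_536, 2))          # /16 blocks' worth of addresses in the quota
```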

2. Understanding Subnets and ENIs

The CIDR range of public subnets can be smaller, with netmasks such as /27 or /24. We can plan public subnets to be smaller because they host only NAT gateways and bastion hosts. At the same time, each subnet needs at least 6 free IPs that Amazon EKS can consume for its internal use, such as creating its network interfaces in the subnets.

We don’t want to expose our application workloads to the public internet, so we will mostly host our workloads in private subnets, which should be larger. In the example architecture above we have used a /20 netmask for the private subnets, which gives 4,096 IPs each (a few of them are reserved for internal use).
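Subnet sizes can be worked out the same way with the `ipaddress` module. This sketch carves /20 subnets out of the 192.168.0.0/16 VPC range and subtracts the five addresses AWS reserves in every subnet (the first four and the last one). Which /20 blocks the example architecture actually uses is not stated, so the two picked here are an assumption.

```python
import ipaddress

AWS_RESERVED_PER_SUBNET = 5  # first four addresses and the last one in each subnet


def usable_addresses(cidr: str) -> int:
    """Addresses left for our resources after the AWS-reserved ones."""
    return ipaddress.ip_network(cidr).num_addresses - AWS_RESERVED_PER_SUBNET


vpc = ipaddress.ip_network("192.168.0.0/16")
# Assumption: take the first two /20 blocks as the private subnets.
private_subnets = [str(subnet) for subnet in list(vpc.subnets(new_prefix=20))[:2]]

for cidr in private_subnets:
    print(cidr, usable_addresses(cidr))

# Typical public subnet sizes mentioned above.
for cidr in ("192.168.255.0/27", "192.168.254.0/24"):
    print(cidr, usable_addresses(cidr))
```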

In a worker node, the VPC CNI plugin automatically allocates secondary ENIs (Elastic Network Interfaces) when the IP addresses from the primary network interface are exhausted. By default, secondary network interfaces created by the VPC CNI plugin are in the same subnet as the primary network interface. Sometimes, if there are not enough IP addresses in the primary interface's subnet, we may have to use a different subnet for secondary ENIs; this can be done with custom networking in the VPC CNI plugin.

However, we need to be aware that when we enable custom networking, IP addresses from Primary ENI will not be assigned to Pods, so multiple secondary ENIs with different subnets will be helpful but we will be wasting one ENI that is the primary ENI.

3. Choosing the right EC2 Instance types for worker nodes

The number of secondary ENIs attached to an EC2 instance (worker node) depends on its instance type. Each EC2 instance type has a maximum number of network interfaces that it can support, and there is also a maximum number of private IPv4 addresses per network interface, again based on the instance type.

We need to analyse the CPU and memory requirements of Pods hosting our application containers and number of Pods which will be scaled during minimum and maximum traffic periods. Then we need to analyse how many EC2 instances will be scaled.

Based on these factors including the ENIs and IPs which the instance can handle we need to choose the right type of Instance.
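A back-of-the-envelope version of this analysis can be written down as a small Python sketch. It estimates how many worker nodes a workload needs by taking the tightest of three per-node limits: CPU, memory, and Pod IP capacity (ENIs times IPs per ENI, minus one address per ENI, as in the worked example later in this post). It ignores resources reserved for the operating system and Kubernetes daemons, so treat the result as a rough lower bound; the class and function names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: float
    memory_gib: float
    max_enis: int
    ips_per_eni: int

    def pod_ip_capacity(self) -> int:
        # One address on each ENI is not available to Pods.
        return self.max_enis * (self.ips_per_eni - 1)


def nodes_needed(instance: InstanceType, pods: int,
                 cpu_per_pod: float, mem_per_pod_gib: float) -> int:
    """Rough node count: the tightest of the CPU, memory, and IP limits wins."""
    pods_by_cpu = math.floor(instance.vcpus / cpu_per_pod)
    pods_by_memory = math.floor(instance.memory_gib / mem_per_pod_gib)
    pods_per_node = min(pods_by_cpu, pods_by_memory, instance.pod_ip_capacity())
    if pods_per_node < 1:
        raise ValueError("a single Pod does not fit on this instance type")
    return math.ceil(pods / pods_per_node)


M5_4XLARGE = InstanceType("m5.4xlarge", vcpus=16, memory_gib=64, max_enis=8, ips_per_eni=30)

# CPU-bound workload: 64 Pods fit per node by CPU.
print(nodes_needed(M5_4XLARGE, pods=500, cpu_per_pod=0.25, mem_per_pod_gib=0.5))
# Tiny Pods: the IP limit of 232 per node becomes the bottleneck.
print(nodes_needed(M5_4XLARGE, pods=500, cpu_per_pod=0.05, mem_per_pod_gib=0.1))
```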

Pod scaling and instance scaling in an EKS cluster is a big topic that I will discuss in a separate blog. One thing we need to keep in mind is that the EC2 instance type also affects IP address allocation to Pods.

4. Managing the ENIs and IP allocation to Pods

We might go for smaller instance types and small clusters to save cost or for various other business reasons. In small clusters it becomes very important to manage IPs carefully, and for this we need to understand how VPC CNI works.

VPC CNI has two components, aws-cni and ipamd (IP address management daemon). aws-cni is responsible for setting up the Pod-to-Pod communication network, and ipamd is a daemon running in the Kubernetes cluster that is responsible for managing ENIs and a warm pool of IPs.

To scale out Pods quickly it is necessary to maintain a warm pool of IPs, because provisioning a new ENI and attaching it to an EC2 instance can take some time. So ipamd attaches an ENI in advance and maintains a warm pool of IPs.

Let us understand how ipamd allocates ENI and IPs with an example

Let’s say we have planned for m5.4xlarge instances in a subnet that has a range of 256 IPs. Each m5.4xlarge instance can support up to 8 ENIs, and each ENI can support up to 30 IPs. Out of the 30 IPs on each ENI, one IP is reserved for internal use.

When the instance is added to the EKS cluster, it starts with one ENI, which is the primary. If the number of Pods running on the instance is between 0 and 29, then to keep a warm pool of IPs ipamd asks the EC2 service to allocate one more (secondary) ENI, so the instance has 2 ENIs in total and 58 IPs available at the start. When the number of running Pods reaches 30 (>29), ipamd requests one more ENI, which increases the total to 3 ENIs and 87 IPs for the instance. This continues as the number of running Pods grows, up to the maximum number of ENIs the EC2 instance supports.

So, the formula for number of IPs is

the number of ENIs for the instance type × (the number of IPs per ENI - 1)

In this case, even though only 30 Pods are running on the node, 87 IPs are allocated to it. The 87 - 30 = 57 free IPs might never be used on that instance. This also leads to a situation where one instance in the subnet gets more IPs while other instances in the same subnet starve for IPs.
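The walkthrough above can be reproduced with a small Python model. This is a simplified sketch of the default behaviour as described in this post (one spare ENI kept warm beyond the ENIs already holding Pods), not the actual ipamd implementation; the function names are hypothetical.

```python
import math


def pod_ip_capacity(enis: int, ips_per_eni: int) -> int:
    """The formula above: ENIs x (IPs per ENI - 1)."""
    return enis * (ips_per_eni - 1)


def default_attached_enis(running_pods: int, max_enis: int, ips_per_eni: int) -> int:
    """ENIs attached when one spare ENI is kept warm (simplified model)."""
    usable_per_eni = ips_per_eni - 1
    # ENIs that already hold Pod IPs; the primary ENI is always attached.
    enis_in_use = max(1, math.ceil(running_pods / usable_per_eni))
    # One extra ENI is kept warm, capped by the instance type limit.
    return min(max_enis, enis_in_use + 1)


# m5.4xlarge figures from the example: 8 ENIs, 30 IPs per ENI.
for pods in (0, 29, 30):
    enis = default_attached_enis(pods, max_enis=8, ips_per_eni=30)
    allocated = pod_ip_capacity(enis, ips_per_eni=30)
    print(f"{pods} Pods: {enis} ENIs, {allocated} IPs allocated, {allocated - pods} free")
```

For 30 running Pods the model gives 3 ENIs and 87 allocated IPs, of which 57 sit idle, matching the numbers in the text.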

On the instance that got more IPs, other resources such as vCPU and memory may already be largely consumed by the running Pods, while other instances with free vCPU and memory may not have sufficient IPs to run more Pods. This creates an imbalance in the distribution of Pods between instances and wastes resources, which escalates cost.

To manage such situations, we can define CNI configuration variables WARM_ENI_TARGET, WARM_IP_TARGET and MINIMUM_IP_TARGET.

WARM_ENI_TARGET is 1 by default, so we need not touch it.

With WARM_IP_TARGET we can define the number of free IP addresses ipamd should maintain.

With MINIMUM_IP_TARGET we can define the minimum total number of IPs that ipamd keeps allocated on the node for Pods.

It is recommended to use MINIMUM_IP_TARGET and WARM_IP_TARGET together; this reduces the number of API calls ipamd makes to EC2, which could otherwise slow down the system.

In the above example we can define MINIMUM_IP_TARGET = 30 and WARM_IP_TARGET = 2. This makes ipamd maintain a warm pool of only 2 IPs once 30 Pods are running, which leaves room for other instances in the subnet to consume the available IPs.
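The effect of these two settings can be approximated with one line of arithmetic: the node keeps at least MINIMUM_IP_TARGET addresses, and otherwise as many as the running Pods need plus WARM_IP_TARGET spare ones. The Python sketch below is a simplified model under that assumption, not the real ipamd logic, and the function name is hypothetical.

```python
def target_allocated_ips(running_pods: int, warm_ip_target: int,
                         minimum_ip_target: int) -> int:
    """IPs kept on the node: Pods in use plus the warm pool, never below the minimum."""
    return max(minimum_ip_target, running_pods + warm_ip_target)


# Settings from the example above.
MINIMUM_IP_TARGET = 30
WARM_IP_TARGET = 2

for pods in (5, 30, 40):
    allocated = target_allocated_ips(pods, WARM_IP_TARGET, MINIMUM_IP_TARGET)
    print(f"{pods} Pods running: {allocated} IPs allocated")
```

With 30 running Pods the node holds 32 IPs instead of the 87 it would hold with the default ENI-based warm pool, leaving the remaining addresses free for other instances in the subnet.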

Increasing the Pod Density per Node

To increase Pod density per node we can enable prefix mode, which increases the number of IPs allocated to a node while keeping the maximum number of ENIs per instance the same. This can reduce cost by bin-packing more Pods per instance and using fewer instances. However, we need to be aware that this can affect the high availability of our applications, which may need to run across multiple availability zones.
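To get a feel for the difference, here is a minimal sketch that compares the IP capacity of a node with and without prefix mode. It assumes that in prefix mode each address slot on an ENI holds a /28 IPv4 prefix (16 addresses) instead of a single IP; in practice CPU, memory, and the configured maximum number of Pods per node cap the usable density well below the raw figure. The function names are hypothetical.

```python
import ipaddress

# A /28 IPv4 prefix contains 16 addresses.
ADDRESSES_PER_PREFIX = ipaddress.ip_network("10.0.0.0/28").num_addresses


def secondary_ip_capacity(max_enis: int, ips_per_eni: int) -> int:
    """Pod IP capacity with individual secondary IPs."""
    return max_enis * (ips_per_eni - 1)


def prefix_mode_ip_capacity(max_enis: int, ips_per_eni: int) -> int:
    """Pod IP capacity when every slot holds a /28 prefix instead of one IP."""
    return secondary_ip_capacity(max_enis, ips_per_eni) * ADDRESSES_PER_PREFIX


# m5.4xlarge figures used earlier in this post: 8 ENIs, 30 IPs per ENI.
print(secondary_ip_capacity(8, 30))
print(prefix_mode_ip_capacity(8, 30))
```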